This jam is now over. It ran from 2023-03-24 17:00:00 to 2023-03-26 23:30:00.

A weekend for exploring AI & society!

With collaborators from OpenAI, Centre for the Governance of AI, Existential Risk Observatory and AI Objectives Institute, we present cases that you will work on in small groups over the weekend to find solutions!

🏆$2,000 on the line

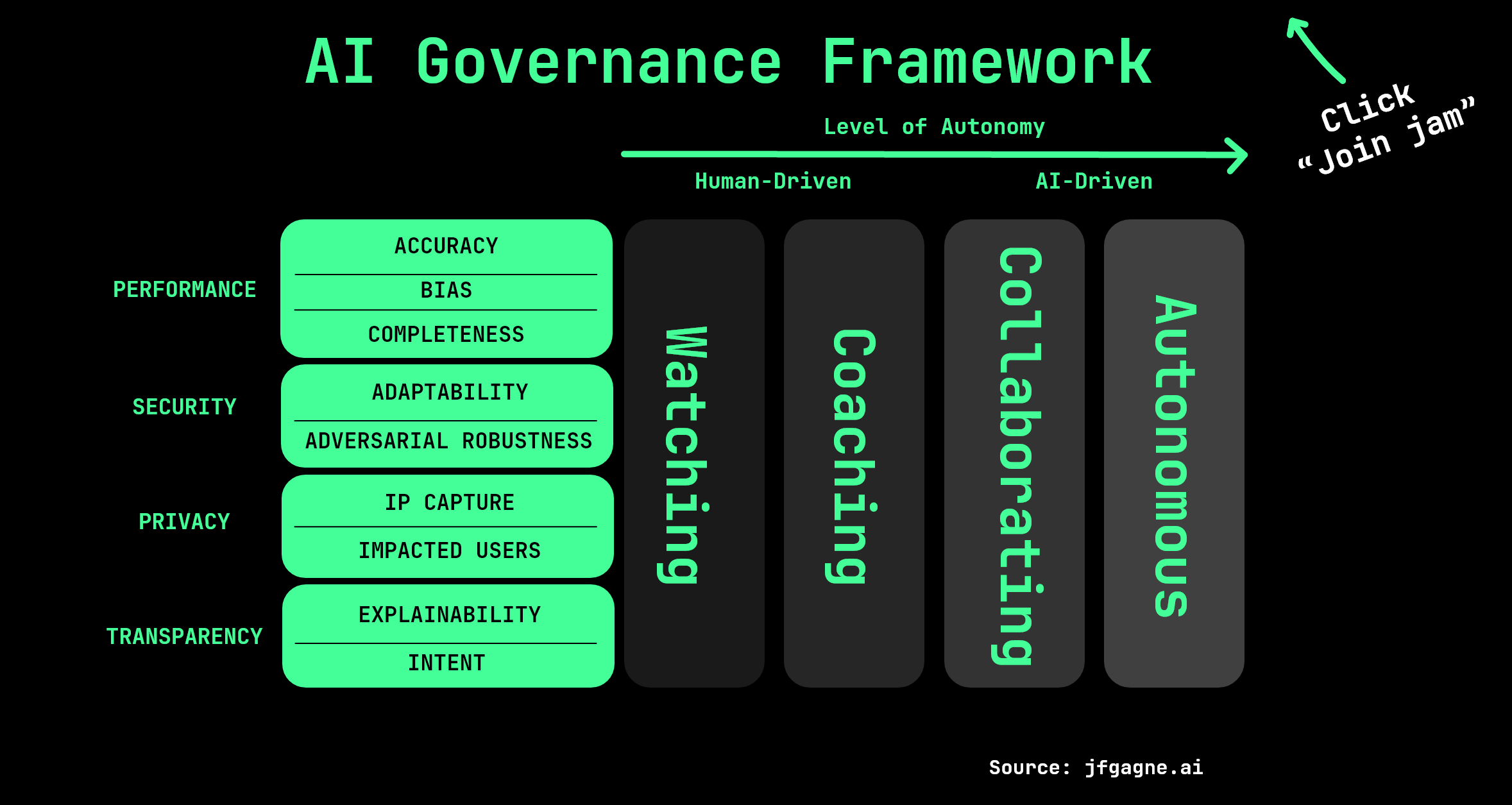

AI Governance

There are immense opportunities and risks with artificial intelligence. In this hackathon, we focus on how we can mitigate and secure against the most severe risks using strategic frameworks for AI governance.

Some of these risks include labor displacement, inequality, an oligopolistic global market structure, reinforced totalitarianism, shifts and volatility in national power, strategic instability, and an AI race that sacrifices safety and other values [1].

The objective of this hackathon is to research strategies and technical solutions to the global strategic challenges of AI.

- Can we imagine scenarios where AI leads to events that pose a major risk to humanity? [2, 3, 4]

- Can we predict when AI will reach specific milestones and how future AI will act and behave from a technical perspective? [5, 6, 7]

- Can we find warning signs that tell us we are about to reach a state of extreme risk [8, 9, 10], and can we figure out when those signs actually work? [11]

These questions and more will be the target of your 48 hours! Your work has the potential to turn into a technical report or a blog post, and we look forward to seeing what you come up with.

Reading group

Join the Discord above to be a part of the reading group where we read up on the research within AI governance! The preliminary reading list is:

- AI Governance: A Research Agenda (Dafoe, 2018) from the Centre for the Governance of AI

- Current and Near-term AI as a Potential Existential Risk Factor (Bucknall & Dori-Hacohen, 2022)

- Framing AI strategy (Stein-Perlman, 2023)

- Let's Think About Slowing Down AI (Grace, 2022)

Local groups

If you are part of a local machine learning or AI safety group, you are very welcome to set up a local in-person site to work together with people on this hackathon! We will have several sites across the world (list upcoming) and hope to increase the number of local sites. Sign up to run a jam site here.

You will work in groups of 1-6 people within our hackathon Discord and in the in-person event hubs.

Project submission & judging criteria

You write up your case proposals as PDFs using the template and upload them here on itch.io as project submissions for this hackathon. See how to submit your project.

We encourage all participants to rate each other's projects on the following categories:

| Criterion | Question |
| --- | --- |
| Overall | How good are your arguments for how this result informs our strategic path with AI? How informative are the results for the field of AI governance in general? |
| AI governance | How informative is it in the field of AI governance? Have you come up with a new method or found completely new strategic frameworks? |
| Novelty | Have the results not been seen before, and are they surprising compared to what we expect? |
| Generality | Do your research results show a generalization of your hypothesis? E.g. have you covered all relevant strategic cases around your scenario, and do we expect it to accurately represent the case? |

The final winners will be selected by the judges who have also been part of creating the cases with us.

Inspiration

Follow along on YouTube and see the reading list above. Other resources from the one-pagers can be found here:

- GPT-4 Technical Report (openai.com)

- Planning for AGI and beyond (openai.com)

- Our approach to alignment research (openai.com)

- What a Week! GPT-4 & Japanese Alignment (apartresearch.com)
