Backup Transformer Heads are Robust to Ablation Distribution

Results

| Criteria | Rank | Score* | Raw Score |
| --- | --- | --- | --- |
| Interpretability | #1 | 4.222 | 4.222 |
| Reproducibility | #1 | 4.556 | 4.556 |
| Judge's choice | #2 | n/a | n/a |
| Generality | #10 | 2.778 | 2.778 |
| ML Safety | #10 | 2.778 | 2.778 |
| Novelty | #11 | 2.889 | 2.889 |

Ranked from 9 ratings. Score is adjusted from raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous.

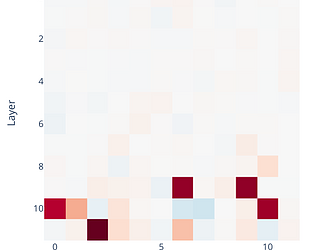

- Cool project! The direction that feels most exciting to me is understanding WHY backup (or backup backup!) heads react the way they do. Is there a specific direction that matters? What happens if we replace the ablated head's output with the average of that head's output across a bunch of inputs of the form A & B ... A ... -> B for different names? How do backup and backup backup heads differ: does their attention pattern change? Do they have significant self-attention? The bit I found most exciting about this work is the discovery of backup backup heads, which is a) hilarious and b) fascinating and unexpected. (Also, hi Lucas!) -Neel
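
The mean-ablation experiment suggested in the feedback above could be sketched roughly as follows. This is a minimal NumPy illustration and not the team's code: the array layout `[batch, seq, n_heads, d_model]`, the function names `mean_ablate_head` and `zero_ablate_head`, and the random stand-in data are all assumptions made for the example. In practice the per-head outputs would be read from a real model via hooks.

```python
import numpy as np

def mean_ablate_head(head_outputs, head_index, reference_outputs):
    """Replace one head's output with its mean over a reference batch.

    head_outputs:      [batch, seq, n_heads, d_model], prompts under study (assumed layout)
    reference_outputs: [ref_batch, seq, n_heads, d_model], reference prompts,
                       e.g. the same template filled with different names
    """
    ablated = head_outputs.copy()
    # Average over the reference batch only, keeping one mean vector per position.
    mean_output = reference_outputs[:, :, head_index, :].mean(axis=0)  # [seq, d_model]
    # Broadcasts the [seq, d_model] mean across every prompt in the batch.
    ablated[:, :, head_index, :] = mean_output
    return ablated

def zero_ablate_head(head_outputs, head_index):
    """Baseline for comparison: set the head's output to zero."""
    ablated = head_outputs.copy()
    ablated[:, :, head_index, :] = 0.0
    return ablated

# Random stand-in data; real activations would come from the model.
rng = np.random.default_rng(0)
outputs = rng.normal(size=(4, 6, 3, 8))     # 4 prompts, 6 positions, 3 heads
reference = rng.normal(size=(16, 6, 3, 8))  # 16 reference prompts

mean_ablated = mean_ablate_head(outputs, head_index=1, reference_outputs=reference)
zero_ablated = zero_ablate_head(outputs, head_index=1)
```

Running the rest of the forward pass on `mean_ablated` versus `zero_ablated` would then show whether downstream heads compensate differently under the two ablation distributions.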

Where are you participating from?

["Online"]

What are the names of your team members?

Lucas Sato, Gabe Mukobi, Mishika Govil

What are the email addresses of all your team members?

lucasjks@gmail.com, gmukobi@stanford.edu, mishgov@stanford.edu

What is your team name?

Klein Bottle
