
Identifying a Preliminary Circuit for Predicting Gendered Pronouns in GPT-2 Small

Results

| Criteria | Rank | Score* | Raw Score |
| --- | --- | --- | --- |
| Mechanistic interpretability | #1 | 4.571 | 4.571 |
| Judge's choice | #2 | n/a | n/a |
| Generality | #4 | 3.286 | 3.286 |
| ML Safety | #4 | 3.429 | 3.429 |
| Reproducibility | #4 | 4.143 | 4.143 |
| Novelty | #8 | 3.000 | 3.000 |

*Ranked from 7 ratings. Score is adjusted from the raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous and shown in a random order.

- Fun project! Nice work, and cool to see my demo + ACDC used in the wild :) I think the "5% of (head, position) components used" figure is a bit inflated: my guess is that most tokens in the sentence don't actually matter to the task, so that automatically disqualifies many (head, position) pairs (I'd love to be proven wrong!). I found the claim that name -V> is -K> 't matters a lot interesting, in particular the importance of the key connection; this is surprising to me! I'm guessing there's some kind of grammatical circuit? I also appreciated the discussion of the importance of the threshold in finding the algorithm. It's interesting to see the importance of this kind of hyper-parameter tuning, and I think this kind of empirical finding is an important contribution. My guess is that there's a significant chunk of the circuit devoted to realising that there's a name in the previous sentence and a pronoun that comes next, and to attending to the name, and then some extra effort to look up the gender of that name and map it to a pronoun. I would personally have made a baseline distribution with a name of the opposite gender rather than "That person" to control for the "discover it's a pronoun identification task + find the name" part. I'd also be interested to look at the attention patterns for the important heads, on both the gendered and baseline distributions, and at how these change after key connections are patched in or out. But yeah, overall, solid work, well executed, and interesting findings from a weekend; very much the kind of work that I wanted to come out of this hackathon! I hope you continue investigating this after the weekend :)

- This is really great work utilizing Conmy's automatic circuit identification algorithm. There's a lot to dive into here, and the task selection seems to provide a good case for running the algorithm. I would be curious to see your take on what the next steps for the circuit identification algorithm could be, and would love to see this project taken further! Really good work.

What are the full names of your participants?

Chris Mathwin, Guillaume Corlouer

Does anyone from your team want to work towards publishing this work later?

Maybe

Where are you participating from?

London EA Hub


Comments

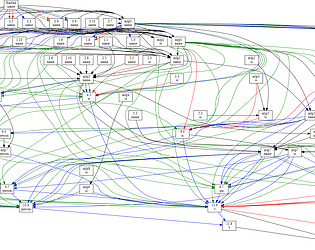

Thank you for the great feedback :D I realized that there was a typo on pp. 12 and 14 regarding the information flow graphs. This has since been fixed :)

It's pretty cool! I would try implementing something similar using TRACR, and also look for circuits in bigger or other models. This might indicate what is crucial for the computation. Maybe this graph is invariant across different models because it works so well, or maybe it is completely different in other models for some other reason. Either result would be super interesting and would give evidence on a fundamental question of MI: "to what extent can we study toy/small models to learn how large models work?"

I will also add that when you reverse-engineer software, you often end up with a computational graph whose structure alone is enough to give insight into the computation.

This is a really nice connection. Thanks for this!

Fascinating! I hadn't seen Conmy's automatic circuit discovery tool before: https://arthurconmy.github.io/automatic_circuit_discovery/

And you can imagine I searched around quite a bit for exactly that!