Play project

We Discovered An Neuron's itch.io pageResults

| Criteria | Rank | Score* | Raw Score |

| Judge's choice | #1 | n/a | n/a |

| Reproducibility | #1 | 4.400 | 4.400 |

| Mechanistic interpretability | #2 | 4.400 | 4.400 |

| Novelty | #3 | 4.200 | 4.200 |

| Generality | #11 | 2.800 | 2.800 |

| ML Safety | #11 | 2.800 | 2.800 |

Ranked from 5 ratings. Score is adjusted from raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous and shown in a random order.

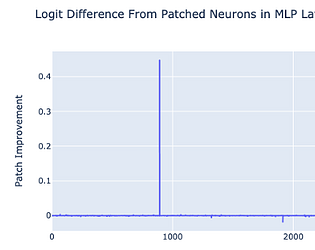

- Very cool project! This aligns with what max activating dataset examples finds: https://www.neelnanda.io/anneuron (it should be on neuroscope but I ran out of storage lol) I'm generally pretty surprised that this worked lol, I haven't seen activation patching seriously applied to neurons before, and wasn't sure whether it would work. But yeah, really cool, especially that it found a monosemantic neuron! I'd love to see this replicated on other models (should be pretty easy with TransformerLens) Tbh, the main thing I find convincing in the notebook is the activation patching results, I think the rest is weaker evidence and not very principled. Some nit picks: - ablation means setting to zero, not negating. Negating is a somewhat weird operation that seems maybe more likely to break things? Neuron activations are never significantly negative, because GELU tends to give positive outputs. IMO the most principled is actually a mean or random ablation (described in https://neelnanda.io/glossary ) We already knew that the residual had high alignment with the neuron input weights, because it had a high activation! Just plotting the neuron activation over the text would have been cool, likewise plotting it over some other randomly chosen text. It'd have been interesting to look at the direct effect on the logits, you want to do W_out[neuron_index, :] @ W_U and look at the most positive and negative logits. I'm curious how much this composes with later layers vs just directly contributing to the logits It would also have been interesting to look at how the inputs to the layer activate the neuron. But yeah, really cool work! I'm surprised this worked out so cleanly. I'm curious how many things you've tried? I think this would be a solid thing to clean up into a blog post and a public Colab, and I'd be happy to add it to a page of cool examples of people using TransformerLens Oh, and the summary under-sells the project. "Encoding for an" sounds like it activates ON an, not that it predicts an. The second is much cooler! -Neel

- This is an amazing product for just a weekend and it represents a perfect deep dive into how a specific mechanism works. All the steps you would want to check using the TransformerLens library are there, and they are very elaborate. Ablation, activation patching, weight modulation, and the qualitative analysis of how easy it is to get them to say it in the first place. Great work!

What are the full names of your participants?

Joseph Miller, Clement Neo

What is you and your team's career stage?

Joseph - research alignment AI engineer, Clement - 3rd year undergraduate

Does anyone from your team want to work towards publishing this work later?

Maybe

Where are you participating from?

London EA Hub

Leave a comment

Log in with itch.io to leave a comment.

Comments

Very cool! can you maybe copy this capability from gpt-2 large to smaller models?

10/10 for the title haha