Can you give us a brief description of your "mashing".....i am gathering from other comments that you averaged? and rounded? render to 16? depths.... can you shift these depth locations on each sequential frame?... be a major step

So DoomLoader basically ported DOOM to Unity. What I'm doing is running DoomLoader in the background (so it's still a 3D game, technically), but I overlay a UI texture across the screen. I have a script that takes the 3D camera feed, turns it into a render texture (just an array of the pixels that the camera would normally display to the player), applies the algorithm from the paper, and then feeds that to the UI texture as the end display.

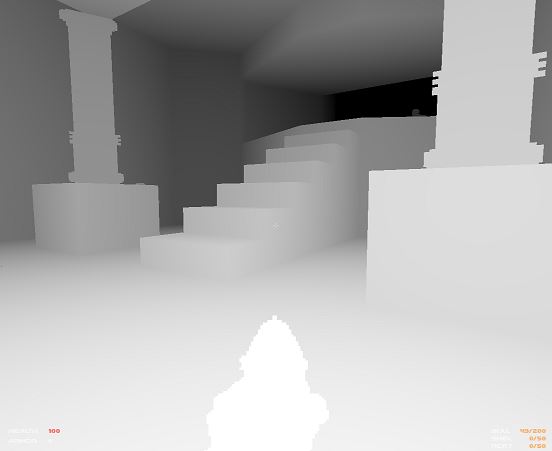

The key is that the algorithm needs a depth map. So I made a couple shaders that almost all the objects are now using, which ignore the DOOM textures and instead make all objects greyscale, depending on their distance from the player. So underneath the stereogram, the game is just playing, and every frame looks like a depth map. I'll post an image of that below, with a high "render distance" setting.

The rounding that I've mentioned is in reference to the paper, specifically line 27 if you look at the code in Figure 3 here. The equation is s = round((1-mu*z) * E/(2-mu*z)), which will make more sense if you read the rest.

okay i understand, so it converts to 4 bit grey scale..thus 16 depths.......so it needs to convert it to 8 bit, thus 256 depths...but grab every 16th layer on one frame, then shift forward one bit and grab every 16th +1 depth on the next....16th +2 on the next..and so on...that seems relatively simple....that means each 256 depths will be displayed about 4 time every second.....that sounds perfect....could implement fibonacci later but keep it simple for now i suppose.

It's not quite that simple. I'm already using full 32 bit textures, so 8 bit for the grey. Greyness corresponds to z in the algorithm (z is a value between 0 and 1, representing distance from the player). It's not the granularity of the z values, but the fact that two different z values will round to the same separation value in the algorithm.

This is just a standard way to round to the nearest int, which needs to be done because you can't have a separation of 60.5 pixels, for example, because pixels come in wholes.

So the only things I can change that would affect the layers are DPI and mu (depth of field). There are combinations of these that give a better spread of separation, but it often turns out messing up the stereogram.

You have obviously chosen the depth of field and DPI values carefully, if you can cycle through all the values that give a good separation you may approximate a 3D scene

You could have a seperation of half a pixel, it's just this pixel takes on 2 values over 2 consecutive frames.........

To this end you could have any fraction of a pixel within reason of frame rate, bit depth and human perception...