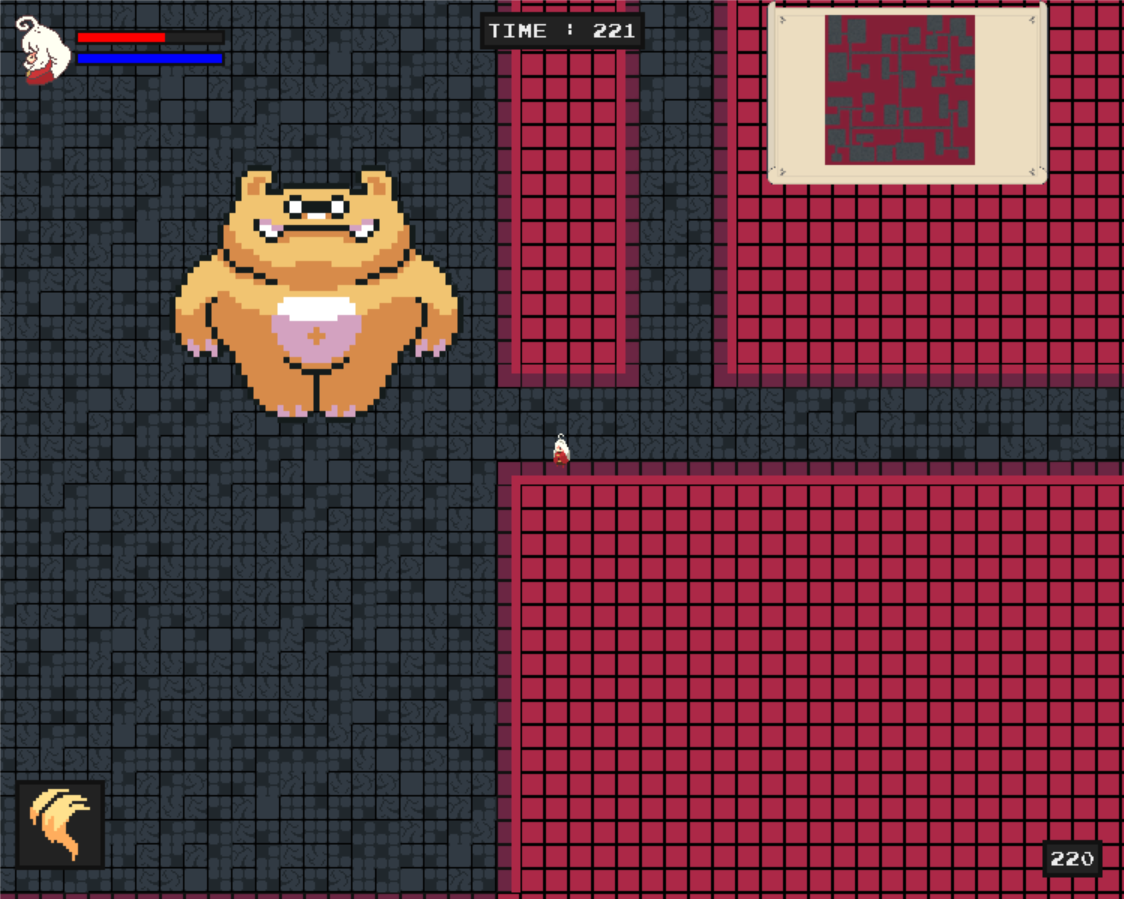

I'm impressed by the TTS and LLM integrations. The enemies were too large and got caught on the doorways, so I could just sit in the hall and kill them. One thing to look at for item generation is a Stable Diffusion model that you could train on your tileset, then ask for an item fitting a specific description. The same goes for enemies, with background removal, although I admit animations for enemies like that would be a challenge.


Thank you for testing it ;) I'm a data scientist, so my idea was just to demonstrate the potential use of LLMs. I had never actually made any fancy games prior to this jam, so the gameplay is FAR from perfect. It's not meant to be hard or anything, just to demonstrate something new.

I agree with your analysis. I tried to explain it, but if I had had more time I would have used Transformers Agents with Falcon 7B and the diffusers tools to generate assets. Another approach I considered was to use a CLIPSeg tool inside an agent to find a usable asset in a big spritesheet of objects. In the end, I did not have time to produce what I had in mind, so this is only a POC.

Ya, 48 hours is tight. We ended up having Falcon 7B turn basic descriptions into prompts for Stable Diffusion.

We had the gameplay fixed, with specific strings defining the enemies (e.g. "A weak long ranged enemy"), and then used that setup to ask the LLM:

"give me the name of an enemy that would be in a world {world description} fighting against {character description} with the description {enemy description}"

That then chained into

"give me the visual description of the character {Enemy Name} in the world {world description}"

Which chained to asking stable diffusion for an image with background removed of

"A figure in the center of frame. {Enemy Visual Description}. 4K. colorful. High Quality..."

I'd already been using this kind of prompt chaining in other projects. It does require a decent amount of prompt engineering to get the prompts just right, because a prompt early in the chain being off makes the ones later in the chain even further off.
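
For anyone who wants to try this kind of chain, here is a minimal sketch in Python. It is not the actual jam code: `llm` is a hypothetical stand-in for whatever function sends a prompt to the model (Falcon 7B in this case) and returns its text, and `build_image_prompt` is just a name picked for illustration. The templates are the ones quoted above; the image prompt stops at "High Quality" because the rest of the keywords were not given.

```python
from typing import Callable

# Templates copied from the three prompts quoted above.
NAME_PROMPT = (
    "give me the name of an enemy that would be in a world {world} "
    "fighting against {character} with the description {enemy}"
)
VISUAL_PROMPT = (
    "give me the visual description of the character {name} in the world {world}"
)
# The original prompt continues with more keywords that were not listed.
IMAGE_PROMPT = "A figure in the center of frame. {visual}. 4K. colorful. High Quality"


def build_image_prompt(
    llm: Callable[[str], str],  # hypothetical: takes a prompt, returns model text
    world: str,
    character: str,
    enemy: str,
) -> str:
    """Chain two LLM calls and return the final text-to-image prompt."""
    # Step 1: fixed gameplay description -> enemy name.
    name = llm(
        NAME_PROMPT.format(world=world, character=character, enemy=enemy)
    ).strip()
    # Step 2: enemy name -> visual description.
    visual = llm(VISUAL_PROMPT.format(name=name, world=world)).strip()
    # Step 3: visual description -> image prompt (drop a trailing period so
    # the template does not produce "..").
    return IMAGE_PROMPT.format(visual=visual.rstrip("."))
```

The returned string would then be handed to the image model, with background removal applied to the result. Each step only sees the previous step's output, which is exactly why an early mistake carries through to the end.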

Ah, that's a nice pipeline. It does indeed seem very noise sensitive, as any unexpected output in the early stages gets amplified at the end of the pipeline. In your case, if the guidance toolkit had been working properly (which is not the case, I tested it multiple times), it would have been interesting to use it to get stable prompts: https://github.com/microsoft/guidance

But I judge by results, and in your case it worked well.
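
One cheap way to limit that amplification, without any constrained-generation library, is to check each intermediate answer before passing it down the chain and to ask again if it looks wrong. The sketch below is only an illustration under assumptions: `llm` is a hypothetical prompt-to-text function, and `ask_with_validation` and `looks_like_name` are made-up helpers, not part of guidance or any other toolkit.

```python
from typing import Callable


def looks_like_name(text: str) -> bool:
    """Rough check that the model returned a short, single-line name."""
    return 0 < len(text) <= 40 and "\n" not in text


def ask_with_validation(
    llm: Callable[[str], str],        # hypothetical: prompt in, model text out
    prompt: str,
    is_valid: Callable[[str], bool],
    max_tries: int = 3,
) -> str:
    """Re-ask the same prompt until the answer passes is_valid."""
    for _ in range(max_tries):
        answer = llm(prompt).strip()
        if is_valid(answer):
            return answer
    raise ValueError(f"no valid answer after {max_tries} tries")
```

This does not make the prompts themselves stable, it only stops an obviously bad early output from reaching the later steps.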