the both are windows 10 x64

lbarasc

Creator of

Recent community posts

hi, i dowloaded some FLUX models but when i want to delete it from the user interface of stabilitymatrix, i cannot, nothing happens.

i manualy remove models from the directory data\models but when i start stabilitymatrix, the flux models are always here in the model browser and i cannot download or remove them.

please help me, thank you

Hi, thank you for your answer !

i unzip your localgpt archive to d:\localgpt\

that's ok to ingest a little txt file, it's works !

But it seems i have the same error with i launch 2.bat.

I edit the 2.bat and at the line "set PATH=", i add to the end my path, so i have :

set PATH=C:\Windows\system32;C:\Windows;%DIR%\git\bin;%DIR%;%DIR%\Scripts;D:\LocalGPT\localGPT\models\models--TheBloke--Llama-2-7b-Chat-GGUF\snapshots\191239b3e26b2882fb562ffccdd1cf0f65402adb;

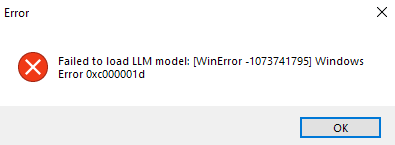

but i have " Could not load Llama model from path: ./models\models--TheBloke--Llama-2-7b-Chat-GGUF\snapshots\191239b3e26b2882fb562ffccdd1cf0f65402adb\llama-2-7b-chat.Q4_K_M.gguf. Received error [WinError -1073741795] Windows Error 0xc000001d (type=value_error)"

i don't understand where is the problem ! i delete the folder models--TheBloke--Llama-2-7b-Chat-GGUF\snapshots\191239b3e26b2882fb562ffccdd1cf0f65402adb\llama-2-7b-chat.Q4_K_M.gguf and 2.bat re download the model (create folders and sub folders correctly) but there is alway the same error when trying to load model !

Please help.

Thank you for all, and sorry to be too noob !

When i ingest a document (constitution.pdf from localgpt github) , i don't have error, but it seems to be stucked after the message loaded, the cursor blinked but nothing else...

ingest.py:147 - Loading documents from D:\LocalGPT\localGPT/SOURCE_DOCUMENTS

Importing: constitution.pdf

D:\LocalGPT\localGPT/SOURCE_DOCUMENTS\constitution.pdf loaded.

So, i run the second bat, here i have a error :

CUDA extension not installed.

CUDA extension not installed.

2024-05-02 06:37:16,651 - INFO - run_localGPT.py:244 - Running on: cpu

2024-05-02 06:37:16,652 - INFO - run_localGPT.py:245 - Display Source Documents set to: False

2024-05-02 06:37:16,652 - INFO - run_localGPT.py:246 - Use history set to: False

2024-05-02 06:37:18,572 - INFO - SentenceTransformer.py:66 - Load pretrained SentenceTransformer: hkunlp/instructor-large

load INSTRUCTOR_Transformer

D:\LocalGPT\miniconda3\lib\site-packages\torch\_utils.py:776: UserWarning: TypedStorage is deprecated. It will be removed in the future and UntypedStorage will be the only storage class. This should only matter to you if you are using storages directly. To access UntypedStorage directly, use tensor.untyped_storage() instead of tensor.storage()

return self.fget.__get__(instance, owner)()

max_seq_length 512

2024-05-02 06:37:21,224 - INFO - run_localGPT.py:132 - Loaded embeddings from hkunlp/instructor-large

2024-05-02 06:37:21,655 - INFO - run_localGPT.py:60 - Loading Model: TheBloke/Llama-2-7b-Chat-GGUF, on: cpu

2024-05-02 06:37:21,655 - INFO - run_localGPT.py:61 - This action can take a few minutes!

2024-05-02 06:37:21,656 - INFO - load_models.py:38 - Using Llamacpp for GGUF/GGML quantized models

Traceback (most recent call last):

File "D:\LocalGPT\localGPT\run_localGPT.py", line 285, in <module>

main()

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1157, in __call__

return self.main(*args, **kwargs)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1078, in main

rv = self.invoke(ctx)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1434, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 783, in invoke

return __callback(*args, **kwargs)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 252, in main

qa = retrieval_qa_pipline(device_type, use_history, promptTemplate_type=model_type)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 142, in retrieval_qa_pipline

llm = load_model(device_type, model_id=MODEL_ID, model_basename=MODEL_BASENAME, LOGGING=logging)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 65, in load_model

llm = load_quantized_model_gguf_ggml(model_id, model_basename, device_type, LOGGING)

File "D:\LocalGPT\localGPT\load_models.py", line 56, in load_quantized_model_gguf_ggml

return LlamaCpp(**kwargs)

File "D:\LocalGPT\miniconda3\lib\site-packages\langchain\load\serializable.py", line 74, in __init__

super().__init__(**kwargs)

File "pydantic\main.py", line 341, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError: 1 validation error for LlamaCpp

__root__

Could not load Llama model from path: ./models\models--TheBloke--Llama-2-7b-Chat-GGUF\snapshots\191239b3e26b2882fb562ffccdd1cf0f65402adb\llama-2-7b-chat.Q4_K_M.gguf. Received error [WinError -1073741795] Windows Error 0xc000001d (type=value_error)

Please help me, i really want / need this localgpt to work !, thank you for all

Thank you for all this powerfull softwares ! I have a problem with

LocalGPT Llama2-7b (w/o gui) [CUDA(tokenizing only, chat on cpu)/CPU]

i launch the first .bat to ingest a document (a pdf in folder SOURCE_DOCUMENTS)., a message shows loading document... and that's all ! console command is not freezed but nothing seems to work, i wait some hours...

so i launch the last .bat to start localgpt, i have errors :

"

CUDA extension not installed.

CUDA extension not installed.

2024-03-27 10:54:55,908 - INFO - run_localGPT.py:244 - Running on: cuda

2024-03-27 10:54:55,908 - INFO - run_localGPT.py:245 - Display Source Documents set to: False

2024-03-27 10:54:55,909 - INFO - run_localGPT.py:246 - Use history set to: False

2024-03-27 10:54:57,360 - INFO - SentenceTransformer.py:66 - Load pretrained SentenceTransformer: hkunlp/instructor-large

load INSTRUCTOR_Transformer

D:\LocalGPT\miniconda3\lib\site-packages\torch\_utils.py:776: UserWarning: TypedStorage is deprecated. It will be removed in the future and UntypedStorage will be the only storage class. This should only matter to you if you are using storages directly. To access UntypedStorage directly, use tensor.untyped_storage() instead of tensor.storage()

return self.fget.__get__(instance, owner)()

max_seq_length 512

2024-03-27 10:55:00,011 - INFO - run_localGPT.py:132 - Loaded embeddings from hkunlp/instructor-large

2024-03-27 10:55:00,260 - INFO - run_localGPT.py:60 - Loading Model: TheBloke/Llama-2-7b-Chat-GGUF, on: cuda

2024-03-27 10:55:00,260 - INFO - run_localGPT.py:61 - This action can take a few minutes!

2024-03-27 10:55:00,262 - INFO - load_models.py:38 - Using Llamacpp for GGUF/GGML quantized models

Traceback (most recent call last):

File "D:\LocalGPT\localGPT\run_localGPT.py", line 285, in <module>

main()

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1157, in __call__

return self.main(*args, **kwargs)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1078, in main

rv = self.invoke(ctx)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 1434, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "D:\LocalGPT\miniconda3\lib\site-packages\click\core.py", line 783, in invoke

return __callback(*args, **kwargs)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 252, in main

qa = retrieval_qa_pipline(device_type, use_history, promptTemplate_type=model_type)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 142, in retrieval_qa_pipline

llm = load_model(device_type, model_id=MODEL_ID, model_basename=MODEL_BASENAME, LOGGING=logging)

File "D:\LocalGPT\localGPT\run_localGPT.py", line 65, in load_model

llm = load_quantized_model_gguf_ggml(model_id, model_basename, device_type, LOGGING)

File "D:\LocalGPT\localGPT\load_models.py", line 56, in load_quantized_model_gguf_ggml

return LlamaCpp(**kwargs)

File "D:\LocalGPT\miniconda3\lib\site-packages\langchain\load\serializable.py", line 74, in __init__

super().__init__(**kwargs)

File "pydantic\main.py", line 341, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError: 1 validation error for LlamaCpp

__root__

Could not load Llama model from path: ./models\models--TheBloke--Llama-2-7b-Chat-GGUF\snapshots\191239b3e26b2882fb562ffccdd1cf0f65402adb\llama-2-7b-chat.Q4_K_M.gguf. Received error [WinError -1073741795] Windows Error 0xc000001d (type=value_error)

"

can you help me ? i have 3060 RTX and Xeon E5 with 64 GB RAM?

thank you for all.

Hi, i am very interested in this engine and i want to buy it.

Someone can submit some sprites ressources (enemies, objects...) to quickly test the engine ?

I see the engine can use 3D modesl (.MD3 quake model), can you submit some simple 3D models of objects and animated enemies ?

Thank you for all,

Sincerely,

Lionel.