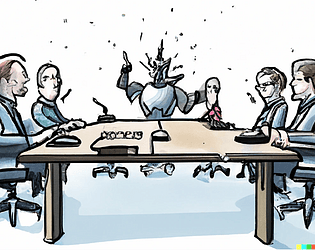

Conceptualization of the National Artificial Intelligence Regulatory Authority (NAIRA)'s itch.io page

Results

| Criteria | Rank | Score* | Raw Score |
| --- | --- | --- | --- |
| Novelty | #1 | 5.000 | 5.000 |
| Generality | #5 | 4.000 | 4.000 |
| Topic | #10 | 4.000 | 4.000 |
| Overall | #13 | 4.000 | 4.000 |

Ranked from 1 rating. Score is adjusted from raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous.

- Nice idea, but not really the assignment: the proposal will not pause progress, but only improve general safety. It is therefore not a direct solution for AI x-risk.
- Nice idea to let competitors poke holes and incentivize them to do so!
- Well thought-out regulatory framework.
- The proposal regulates model release, not model training. X-risks arising in training or in-lab experimentation, which could be the highest ones, are not mitigated.
- Perhaps one could keep the same institutional architecture but apply it to a model training set-up? That might reduce x-risks.
- I really like the core idea of incentivizing competitors to find weak points in a model. If this worked for training instead of deployment, it could really reduce x-risk. Well done!

What are the full names of your participants?

Heramb Podar

What is your team name?

Solo Aligner

Which case is this for?

Policy for pausing AGI progress

Which jam site are you at?

Online

Comments

Judge feedback: A nice idea and novel proposal. It does not so much speak to the prompt (pausing AGI progress) but infrastructure along the lines of that described may be very helpful for improving responsible and safe AI development more generally.