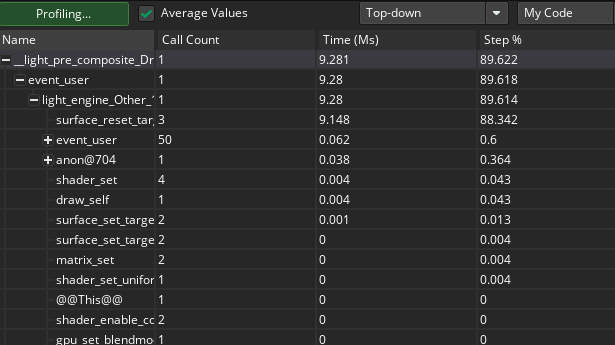

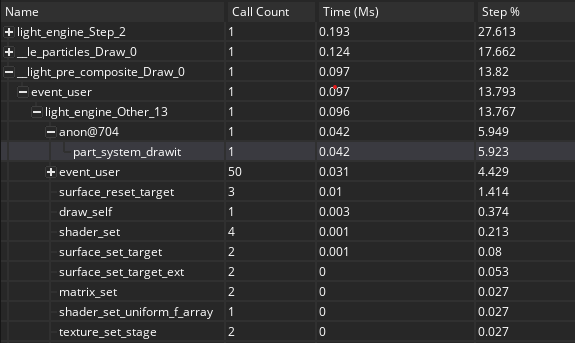

For example, I added 20 of those particle fire lights and ran the profiler:

The important ones to look at here are:

| Name | Time (ms) |
| --- | --- |
| surface_reset_target | 0.01 |
| part_system_drawit | 0.042 |

These are great numbers, and this is with VM, of course, so it would be faster with YYC.

My computer is not a good benchmark, though, because I built a new one a few months ago with an RTX 4090 and other high-end parts. I have tested Eclipse on mobile, a Surface Pro (2014), and my old Linux machine/server, and I've never seen a problem with particles, so I'm curious what it looks like for you.