Sorry I don't have any AA recommendation. You should generally be able to find something by asking around on the official GameMaker community forums. Usually Fast Approximate Anti-aliasing (FXAA) is cheap and all you should need.

Badwrong Games

That does look normal. Remember we are essentially zooming way in on texture pages here with sprites like this. It is like in a 3D game when you get the camera as close as possible to some texture and see the actual pixels.

One way to improve it: on the light_engine object, in the Variables tab, change shadow_atlas_size and shadow_map_size.

The atlas size is the total size that will be used, and you should simply use the biggest your platform supports. The shadow map size is the resolution of each shadow map, and bigger will look better. The more shadow maps you can fit into a single atlas, the better the performance.
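To get a rough feel for that trade-off, here is a small sketch that estimates how many shadow maps fit in one atlas. It assumes the atlas is square and tiles equal-size square maps in a simple grid; how Eclipse actually packs its maps (and how many maps each light type needs) may differ, so treat the result as an approximation. `shadow_atlas_capacity` is a hypothetical helper, not part of Eclipse.

```gml
/// Hypothetical helper: rough count of square shadow maps that fit in a square atlas.
/// Assumption: maps are tiled in a plain grid (Eclipse's real packing may differ).
function shadow_atlas_capacity(_atlas_size, _map_size)
{
    var _per_row = _atlas_size div _map_size; // whole maps per row (partial tiles don't count)
    return _per_row * _per_row;               // rows x columns
}

// Example usage, e.g. from a Create event:
show_debug_message(shadow_atlas_capacity(8192, 1024));
show_debug_message(shadow_atlas_capacity(8192, 2048));
```

Under this assumption, doubling shadow_map_size quarters the number of maps an atlas can hold, which is why a bigger atlas lets you raise the map size without spilling out of a single atlas.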

And again, better anti-aliasing on the sprite itself will help.

Another improvement is adding your own anti-aliasing as a post-processing effect. As you know, this is common and hides jagged edges in most games. You can apply it in the Post Draw event of some object, after the game world is rendered (turn off automatic drawing of the application surface, etc.).
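A minimal sketch of that setup, assuming nothing else in the project already draws the application surface manually. The shader `shd_fxaa`, its `u_texel` uniform, and the controller object are hypothetical; you would supply your own FXAA shader (for example one found on the GameMaker forums).

```gml
// --- Create event of a hypothetical controller object ---
// Stop GameMaker from drawing the application surface automatically,
// so it can be drawn manually through the AA shader.
application_surface_draw_enable(false);

// shd_fxaa and "u_texel" are hypothetical: use your own FXAA shader and uniform name.
u_texel = shader_get_uniform(shd_fxaa, "u_texel");

// --- Post Draw event ---
// By now the game world (including Eclipse's lighting) is in application_surface.
var _w = surface_get_width(application_surface);
var _h = surface_get_height(application_surface);

shader_set(shd_fxaa);
// Size of one texel, which FXAA-style shaders use to sample neighbouring pixels.
shader_set_uniform_f(u_texel, 1 / _w, 1 / _h);
// Post Draw draws straight to the window, so stretch the surface to fill it.
draw_surface_stretched(application_surface, 0, 0, window_get_width(), window_get_height());
shader_reset();
```

Caching the uniform handle in Create avoids looking it up every frame; the Draw GUI event still runs after Post Draw, so UI is drawn on top without being anti-aliased.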

That doesn't look like a problem with the example project. The circle sprite is stretched and has no anti-aliasing pixels, so it will look jagged. Look around the scene and check whether any of the HD sprites look incorrect.

The problem you originally posted might also just be because you are rotating a low resolution sprite with no anti-aliasing.

In the example project, if you place one of the military crate objects that cast shadows and rotate it to some random angle, does it also have the same issue?

That is odd. Maybe the shadow atlas resolution is low?

Do you see any issues with the example project that comes with it? It has high resolution assets which should look correct.

Does it happen in every room too? I've often seen that once you use the in-room camera or viewport settings, something causes conflicts. It's very odd, but if you can, try a fresh project using only a camera made through code.

If you need, get my camera manager here free: https://github.com/badwrongg/gm_camera_and_views

Get the yyz project file and import it. Then create a new local asset from it that you can reuse in any project. Using that camera should have no problems.

The object for day and night is still in the asset, just not placed in the example level since it can make it hard to see how everything else works.

I do not know what is causing the issue in the screenshot. Are you setting up a camera for the project, or is it still the default that a blank project has? It might be that things don't work correctly with the way the default camera is set up. Try setting up a camera through code and assigning it to a view such as view[0].
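A minimal sketch of creating a camera in code and assigning it to view[0], using only built-in GameMaker functions. The object, the 640x360 view size, and the 2x scale are example assumptions; the camera manager linked above is a more complete option.

```gml
// --- Create event of a hypothetical camera object placed in the room ---
var _view_w = 640;  // example resolution
var _view_h = 360;
var _scale  = 2;    // example window scale

view_enabled    = true;  // enable views for the current room
view_visible[0] = true;  // and make view 0 visible

// camera_create_view(x, y, width, height, angle, object, hspeed, vspeed, hborder, vborder)
camera = camera_create_view(0, 0, _view_w, _view_h, 0, noone, -1, -1, 0, 0);
view_camera[0] = camera; // assign the code-made camera to view[0]

// Size of the viewport on screen
view_wport[0] = _view_w * _scale;
view_hport[0] = _view_h * _scale;

window_set_size(_view_w * _scale, _view_h * _scale);
surface_resize(application_surface, _view_w * _scale, _view_h * _scale);

// --- Clean Up event ---
// camera_destroy(camera);
```

The view_* settings apply to the room the instance is in; if the camera object is persistent, repeat those assignments in its Room Start event so every room uses the same setup.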

How are you drawing the isometric blocks? It looks like they are all on the same depth and so when the normal and material maps are drawn they "blend" together.

Eclipse doesn't fit well with isometric projection, and you'll never get the shadows 100% correct (if that matters) from that perspective.

However, if you put them all at different depths, it should separate them when the normal and material maps are composed.
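One common way to give every isometric block its own depth is to sort by screen position. This is a sketch under the assumption that your blocks share a parent object; note that assigning `depth` moves an instance onto a managed layer, which may matter if your Eclipse layer setup relies on specific room layers.

```gml
// Step event (or Create, for blocks that never move) of a hypothetical block parent.
// Blocks lower on screen are "nearer" in isometric view, so they get a lower depth
// and are drawn on top; each block ends up on its own depth unless two share a bbox_bottom.
depth = -bbox_bottom;
```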

When two lights overlap, the result should be brighter. However, there will be an update at some point that makes that easier to control.

The walls are receiving light because the wall does not completely block it. You need to set the light to a lower light depth than the wall's shadow depth; otherwise the light will pass over the wall and light the walls in the other room.

Falloff now just goes from 0 to 1, so adjust that how you need.

The jittering might be because your game uses too low a resolution. I do not know how you set up your camera view and application surface/window, etc. Look at the included example project and you will see it is not jittery like that.

You may also need to change the shadow map size to better match your resolution.

For your light objects, I cannot see your code. This sounds like you are doing something incorrect with your light switch object. Do you have a solid understanding of the difference between an object and an instance?
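To illustrate the object vs. instance difference with a light switch, here is a sketch where each switch stores the id of one specific lamp instance. The names `obj_light_switch`, `obj_lamp`, and the `enabled` variable are hypothetical; substitute whatever your light object actually uses to turn on and off.

```gml
// --- obj_light_switch, Create event ---
// Store the INSTANCE this switch controls. instance_nearest is just an example;
// you could also set target_light in the instance's creation code in the room editor.
target_light = instance_nearest(x, y, obj_lamp); // noone if there are no lamps

// --- obj_light_switch, when the player activates it ---
// Only the one stored instance is affected.
if (instance_exists(target_light))
{
    target_light.enabled = !target_light.enabled;
}

// By contrast, using the OBJECT affects every obj_lamp instance in the room:
// with (obj_lamp) { enabled = !enabled; }
```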

In the example project you will find an object at Example > Scene > shadow_precise_dynamic.

That is an example of how to create what you want. It should get a precise shadow mask based on your sprite_index, and as long as you call event_inherited() in the right places, it should not require you to update anything.

Don't forget: if you change sprite_index, you also need to change material_map and normal_map.
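A sketch of a child of shadow_precise_dynamic that keeps all three sprites in sync. It assumes normal_map and material_map hold sprite assets; the door sprites (`spr_door_open`, `spr_door_open_n`, `spr_door_open_m`, and the closed versions) and the `set_door_sprites` method are hypothetical placeholders.

```gml
// --- Create event of a child object whose parent is shadow_precise_dynamic ---
event_inherited(); // run the parent's Create first so Eclipse sets the instance up

// Swap the sprite and its normal/material maps together, never one without the others.
set_door_sprites = function(_open)
{
    sprite_index = _open ? spr_door_open   : spr_door_closed;
    normal_map   = _open ? spr_door_open_n : spr_door_closed_n; // hypothetical normal sprites
    material_map = _open ? spr_door_open_m : spr_door_closed_m; // hypothetical material sprites
};

// Usage, e.g. when the door opens:
// set_door_sprites(true);

// Any other event you override in the child (Step, Draw, Clean Up, user events)
// should also call event_inherited() so the parent's Eclipse logic still runs.
```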

This could be a feature added at some point, or if you are in a hurry I could advise on how to add it. You would create a mask that is drawn to the shadow atlas for the maps of each directional light.

Ideally I think a new object would need to be created which defines an "indoor" area. Then that could be drawn to the atlas after normal shadows are rendered.

What sort of settings are you using on the lights?

Ambient intensity set to 1 would light the scene entirely and you would barely be able to see the actual lights since everything would be fully "lit" already. Is that the case when you have the ambient intensity at 1?

In the example project, you can place lights and configure them with a GUI: select a light with the mouse and press Tab. This can help you understand what the settings on the lights do. You can also adjust some of the light engine settings by pressing Space.

Storing the buffer in a "var" local does not matter; it is still a memory leak if you do not destroy it. Creating it every single frame in the Draw event is also going to be much slower. If it does not move, create it once and freeze the buffer. If it moves, you can create it, use it, and then destroy it. Or use primitive drawing, if it provides the right vertex format... I'd have to check.

When you create any type of dynamic memory, such as buffers, ds_lists, other ds structures, or surfaces, it stays in memory until you destroy it manually. Declaring a local variable with "var" only holds a reference to that memory; the memory still exists and still needs to be managed, or you'll have a memory leak. Even vertex formats can be destroyed, but those are usually very tiny, and you typically make a couple that never need to be deleted until the game closes.

Note that if something uses memory that should persist until the game closes, you do not really have to destroy it. When the game closes, the OS frees the entire heap.
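A sketch of the usual pattern for the dynamic resources mentioned above: create them once, re-check surfaces before use (they can be lost, e.g. on window resize), and free everything in the Clean Up event, which runs when the instance is destroyed, when the room ends for non-persistent instances, and when the game ends. The variable names are hypothetical.

```gml
// --- Create event ---
enemy_list = ds_list_create(); // lives until ds_list_destroy is called
trail_surf = -1;               // surfaces are created lazily in Draw

// --- Draw event ---
// Surfaces can be lost at any time, so check before every use.
if (!surface_exists(trail_surf))
{
    trail_surf = surface_create(256, 256);
}

// --- Clean Up event ---
// Holding these in variables (or "var" locals) never frees them; this does.
ds_list_destroy(enemy_list);
if (surface_exists(trail_surf)) surface_free(trail_surf);
```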

That's good to hear.

So, this does mean that the default draw_sprite_pos does not include vertex color information.

There are things you can do to optimize a bit and I'll write a better function at some point as well.

One important thing to check first, though: you do not seem to be destroying the vertex buffer, which will definitely lead to a memory leak. You can destroy it and create a new one, or, if the object is not moving, you can create the vertex buffer only once in the Create event and then submit it in the user event like you have. Then add some code to destroy the vertex buffer in the Clean Up event.

Another thing is the macro LE_NORMAL_ANGLE performs some calculations, so instead of calling it for each vertex you should declare a local variable like "var _angle = LE_NORMAL_ANGLE;" and then use that local for the argument.
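A sketch of the create-once, freeze, submit, and Clean Up lifecycle for a static object. Assumptions: the sprite is unrotated and not trimmed on its texture page, the engine's shaders accept the default position/colour/texcoord layout, and `image_blend` stands in for whatever colour your normal or material pass needs. The global format name and the `vbuff`/`vtex` variables are hypothetical.

```gml
// --- Create event ---
// One shared vertex format; like most formats it is tiny and can live until the game closes.
if (!variable_global_exists("vf_pos_col_tex"))
{
    vertex_format_begin();
    vertex_format_add_position();
    vertex_format_add_colour();
    vertex_format_add_texcoord();
    global.vf_pos_col_tex = vertex_format_end();
}

// If a per-vertex value comes from LE_NORMAL_ANGLE, read the macro once into a
// local here (var _angle = LE_NORMAL_ANGLE;) rather than expanding it for each vertex.
var _col = image_blend; // placeholder for the colour your normal/material pass needs
var _uv  = sprite_get_uvs(sprite_index, image_index); // [0]=left [1]=top [2]=right [3]=bottom
var _x1  = x - sprite_xoffset * image_xscale;
var _y1  = y - sprite_yoffset * image_yscale;
var _x2  = _x1 + sprite_width;
var _y2  = _y1 + sprite_height;

// Writes one vertex; the calls must follow the order of the vertex format.
var _add = function(_vb, _px, _py, _u, _v, _c)
{
    vertex_position(_vb, _px, _py);
    vertex_colour(_vb, _c, 1);
    vertex_texcoord(_vb, _u, _v);
};

vbuff = vertex_create_buffer();
vertex_begin(vbuff, global.vf_pos_col_tex);
// Two triangles covering the sprite rectangle
_add(vbuff, _x1, _y1, _uv[0], _uv[1], _col);
_add(vbuff, _x2, _y1, _uv[2], _uv[1], _col);
_add(vbuff, _x1, _y2, _uv[0], _uv[3], _col);
_add(vbuff, _x2, _y1, _uv[2], _uv[1], _col);
_add(vbuff, _x2, _y2, _uv[2], _uv[3], _col);
_add(vbuff, _x1, _y2, _uv[0], _uv[3], _col);
vertex_end(vbuff);
vertex_freeze(vbuff); // upload once; a frozen buffer can no longer be edited
vtex = sprite_get_texture(sprite_index, image_index);

// --- User event (called from the light engine's draw) ---
vertex_submit(vbuff, pr_trianglelist, vtex);

// --- Clean Up event ---
vertex_delete_buffer(vbuff); // without this the buffer leaks
```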

I can make the whole thing into a new object or function in the future with other optimizations.

Are you also having this cast a shadow? Given the shape, I would assume you need to use something like the shadow path object to get the correct polygon shape. This might be a new object I should add that is also a shadow caster. The shadow path object type is for more complex polygon shapes, and I never considered just a skewed/stretched rectangle, which is simpler.

Gotcha. I'm currently in Hawaii and have no access to my dev computer, but I'll look into it when I get back in a few weeks.

You could try using a vertex buffer or primitive in the meantime. Look at the shadow caster objects, specifically the one named something like "textured". It is set up to use a path to define its polygon. It won't do a stretch or skew, but it should use a vertex buffer with the format you need, and there should be enough info there to make a version like draw_sprite_pos.

Also, it is worth confirming that there is no vertex color used in draw_sprite_pos. Just make a blank project and draw with it while setting the global draw color or something. See if you can get it to actually do different image blends or something. If it actually does blend, then it is possible I just need to add something to get it working with the normal and material sprites.
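A sketch of that test for a blank project, comparing a call that definitely takes a blend colour against draw_sprite_pos with both the global draw colour and image_blend set to red. `spr_test` is a hypothetical sprite you add; if the second copy does not come out red, draw_sprite_pos is not using vertex colour.

```gml
// Draw event of a test object in a blank project.
var _w = sprite_get_width(spr_test);
var _h = sprite_get_height(spr_test);

// 1) Reference: draw_sprite_ext takes the blend colour explicitly.
draw_sprite_ext(spr_test, 0, 32, 32, 1, 1, 0, c_red, 1);

// 2) draw_sprite_pos with both the global draw colour and image_blend set to red.
draw_set_colour(c_red);
image_blend = c_red;
var _x = 64 + _w;
var _y = 32;
// Corners: top-left, top-right, bottom-right, bottom-left, then alpha.
draw_sprite_pos(spr_test, 0, _x, _y, _x + _w, _y, _x + _w, _y + _h, _x, _y + _h, 1);

// Restore defaults so other drawing is unaffected.
draw_set_colour(c_white);
image_blend = c_white;
```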

I checked, and there is no draw_sprite_pos_ext or anything similar... that seems like it should exist, though. The extended versions of most draw calls have image_blend, rotation, and alpha. When I add the feature, I'll basically just be adding that exact function, since I'd say it's simply a missing feature of GM itself.

Those user events are being called by another draw event of the light engine, so they are called in draw event.

It sounds like they straight up do not use any vertex color with draw_sprite_pos then. So, it is not possible to use as is.

Instead, a primitive or vertex buffer would need to be used that supplies the vertex color, set to the same value as image_blend.
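A sketch of what such a replacement could look like using GameMaker's textured primitives, which do accept a per-vertex colour. `draw_sprite_pos_blend` is a hypothetical helper, not an existing GM or Eclipse function; it assumes the sprite is not trimmed on its texture page (otherwise the UVs need adjusting).

```gml
/// Hypothetical helper: like draw_sprite_pos, but with a vertex colour argument
/// (the value Eclipse would normally read from image_blend).
/// Corner order matches draw_sprite_pos: top-left, top-right, bottom-right, bottom-left.
function draw_sprite_pos_blend(_spr, _sub, _x1, _y1, _x2, _y2, _x3, _y3, _x4, _y4, _col, _alpha)
{
    var _tex = sprite_get_texture(_spr, _sub);
    var _uv  = sprite_get_uvs(_spr, _sub); // [0]=left, [1]=top, [2]=right, [3]=bottom

    // Triangle strip order: TL, TR, BL, BR
    draw_primitive_begin_texture(pr_trianglestrip, _tex);
    draw_vertex_texture_colour(_x1, _y1, _uv[0], _uv[1], _col, _alpha); // top-left
    draw_vertex_texture_colour(_x2, _y2, _uv[2], _uv[1], _col, _alpha); // top-right
    draw_vertex_texture_colour(_x4, _y4, _uv[0], _uv[3], _col, _alpha); // bottom-left
    draw_vertex_texture_colour(_x3, _y3, _uv[2], _uv[3], _col, _alpha); // bottom-right
    draw_primitive_end();
}
```

In each normal and material user event you would call it with the same colour and alpha those events already use. Because the quad is two triangles, a strongly non-parallelogram shape may show a visible seam along the diagonal.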

I can add the feature in the future.

Ah... looking at the function, I can tell it will not work. It doesn't have an image_blend parameter. Eclipse needs that to pack the other material or normal information.

It probably uses the default image_blend of the instance, so if you set image_blend to the right material or normal values like in the other user events before calling draw_sprite_pos it should work. Just make sure to set image_blend back when doing the normal drawing.

Also the alpha value needs to be the same as in the other normal and material drawing events.

Do you have any screenshot examples to show exactly what you mean?

The light attenuation model uses an easing function to soften the area where the falloff occurs. You could edit it in the shaders to use another easing function.

For example, in the fragment shader of shd_light_ss_hlsl on line 189 the easing is done by:

```hlsl
float edge = sin((1.0 - smoothstep(focus, radius + 1.0, light_len)) * M_PI_2);
```

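You could keep just the smoothstep, inverted so that the edge is still 1 near the light and falls to 0 at the radius:

```hlsl
float edge = 1.0 - smoothstep(focus, radius + 1.0, light_len);
```

That would remove the easing and might get what you want.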
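To compare the two curves before editing the shader, here is a small GML sketch that evaluates both edge formulas at a few distances. GML has no built-in smoothstep, so `smoothstep_gml` is a hypothetical helper mirroring the standard shader definition; the focus and radius values are examples.

```gml
// Mirrors the standard GLSL/HLSL smoothstep.
function smoothstep_gml(_edge0, _edge1, _x)
{
    var _t = clamp((_x - _edge0) / (_edge1 - _edge0), 0, 1);
    return _t * _t * (3 - 2 * _t);
}

var _focus  = 0;  // example values
var _radius = 99;
for (var _d = 0; _d <= 100; _d += 25)
{
    var _s     = smoothstep_gml(_focus, _radius + 1, _d);
    var _eased = sin((1 - _s) * pi / 2); // current shader: sine-eased edge
    var _plain = 1 - _s;                 // plain smoothstep edge
    show_debug_message(string(_d) + ": eased=" + string(_eased) + " plain=" + string(_plain));
}
```

Since sin(x·π/2) ≥ x on [0, 1], the eased version stays brighter further out (about 0.71 versus 0.5 at the midpoint) and then drops more sharply near the radius; the plain version fades more evenly.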

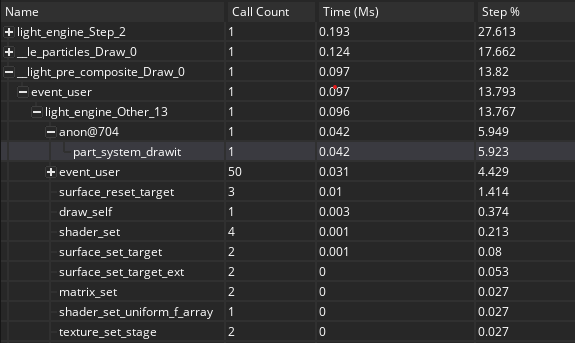

Ah, that makes some sense then. Integrated graphics are going to be extremely inconsistent depending on what rendering is being done. It is weird that it all sits at surface reset, but how they handle VRAM when it's integrated would affect that.

I use a lot of multiple render target (MRT) outputs in the shaders, and I bet integrated graphics do not handle that well at all. So, yes, you'll want to figure out why it decides to use your integrated graphics at all. If you do not use them at all, then I would suggest disabling them in your BIOS settings entirely. Then GM will have no choice when selecting a device.

For example, I added 20 of those particle fire lights and ran the profiler:

The important ones to look at here are:

| Name | Time (ms) |
|---|---|
| surface_reset_target | 0.01 |
| part_system_drawit | 0.042 |

These are great metrics, and this is with VM of course. So, it would be faster with YYC.

My computer is not a good benchmark, though, because I built a new one a few months ago with an RTX 4090 and other high-end parts. I have tested Eclipse on mobile, a Surface Pro (2014), and my old Linux machine/server, and have never seen a problem with particles, so I'm curious what it looks like for you.

The particles rely on GM's built-in particle system, so they could be limited by how efficiently it is implemented internally. Unfortunately, GM still has a very old graphics API implementation, and yes, you will find that lesser things cost more performance than in the newer games you mentioned.

To troubleshoot, please answer:

- What is the actual time in milliseconds that you can see?

- Have you run the same test compiled with YYC? (You'd need to output your own timing or FPS values.)

- What is your GPU utilization actually at when the FPS is that low? (Task Manager > Performance > GPU)
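For the YYC question, a minimal sketch of outputting your own timing with built-in functions: `get_timer()` returns microseconds since the game started, and `fps_real` is the uncapped frame rate the game could reach. The object and variable names are hypothetical.

```gml
// --- Create event of a hypothetical performance overlay object ---
frame_start_us = get_timer();
frame_ms = 0;

// --- Begin Step event: time from one frame to the next ---
var _now = get_timer();                    // microseconds since the game started
frame_ms = (_now - frame_start_us) / 1000; // convert to milliseconds
frame_start_us = _now;

// --- Draw GUI event ---
draw_set_colour(c_white);
draw_text(8, 8,  "fps_real: " + string(fps_real));
draw_text(8, 24, "frame ms: " + string_format(frame_ms, 0, 3));
```

The frame-to-frame time is capped by the game speed (about 16.7 ms at 60 FPS), so fps_real is the better indicator of how much headroom is left.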

surface_reset_target() is a pipeline change, but if that is the most costly thing, that is actually good, because it is not costly.

15% of the step is fine (but we really need to know milliseconds), and it is normal for rendering to cost the most performance. The question is where is the other 85% going? GM did recently change some things about particles, and there could be a lot of data going between CPU and GPU which would be slow if done poorly.

Ah, it won't be the same with sprite_index, because it sets the full sprite to a single value for metal/roughness only. The actual sprite colors would come out really weird as metal/roughness. It is possible to edit the shaders and use something like grayscale values, but I think it would still be a bit odd. The material packer that comes with Eclipse is your best bet for easily making materials for sprites/pixel art, as most material workflows are much more in-depth and require full tools.

If I understand what you are asking, you can do full-image metal/roughness just by setting those values and not using a material map sprite. When the material map sprite is the same as sprite_index (i.e., left as default), the shaders draw metal/roughness using the current sprite_index, with all pixels at the metal/roughness values you set.

Cool. You may not really need a shader, and just setting the global draw color would work. Basically the RGB values of the draw color would be the same as intensity of the sun light. If it looks good then no need to do much more, or if you need more control then a shader might be needed.

Layer scripts are the easiest, and you can get the intensity value from the day/night object (make sure it exists or use "with").
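A simpler variant of the same idea, sketched with the background element's own blend colour (`layer_background_blend`) instead of the global draw colour, since background layers have their own blend setting. `obj_day_night`, its `intensity` variable (assumed to be 0 to 1), and the "Background" layer name are assumptions; use the names from your project.

```gml
// Step event of any controller object.
var _layer = layer_get_id("Background");
if (_layer != -1)
{
    var _bg = layer_background_get_id(_layer);
    if (_bg != -1)
    {
        var _level = 1;                          // fallback if the day/night object is missing
        with (obj_day_night) _level = intensity; // only runs if an instance exists
        var _v = clamp(_level, 0, 1) * 255;
        layer_background_blend(_bg, make_colour_rgb(_v, _v, _v)); // darker at night
    }
}
```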

There is no way in Eclipse to have a light affect only a specific layer, object, material, etc. Something like that would require a totally separate render pass.

My suggestion would be to use layer scripts on your background and set a simple shader that takes the day/night values such as intensity as uniforms to change the background.

Currently, if you set up the layers correctly, you can have backgrounds that are totally unaffected by Eclipse (covered in the basic setup tutorial). So, all you would need to do is set up your background like that and add layer scripts.
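A sketch of the layer script approach, using GameMaker's `layer_script_begin`/`layer_script_end`. Layer scripts run for every draw event type, so they check for the normal Draw event before touching the shader. The shader `shd_bg_daynight` and its `u_intensity` uniform, `obj_day_night.intensity`, and the "Background" layer name are all hypothetical.

```gml
// --- Script asset ---
function bg_daynight_begin()
{
    // Only act in the normal Draw event (event_number 0), not Draw Begin/End or GUI.
    if (event_type == ev_draw && event_number == 0)
    {
        var _intensity = 1;                          // fallback if the object is missing
        with (obj_day_night) _intensity = intensity; // hypothetical day/night value
        shader_set(shd_bg_daynight);
        // In practice you could cache this uniform handle instead of looking it up each frame.
        shader_set_uniform_f(shader_get_uniform(shd_bg_daynight, "u_intensity"), _intensity);
    }
}

function bg_daynight_end()
{
    if (event_type == ev_draw && event_number == 0)
    {
        shader_reset();
    }
}

// --- Room Start event of a controller object ---
var _layer = layer_get_id("Background");
layer_script_begin(_layer, bg_daynight_begin);
layer_script_end(_layer, bg_daynight_end);
```

The shader itself could be as simple as multiplying the sampled colour's RGB by u_intensity; anything more (tinting toward a night colour, for example) would just be more uniforms passed the same way.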