Aaah, I see, you were going for minimalism rather than RISCyness.

(IIUC (which I'm not really sure about), RISC's "reduced instruction" is not really about having a reduced (as in small) set of instructions, or about each instruction word being reduced (small), but about the instruction itself (as in the operation it performs) being reduced to its essentials (as in not doing more than it has to).

Basically, not doing several operations with one instruction, in terms of what the processor would have to do behind the scenes to complete the instruction - and in particular not optional steps that could be done with other instructions instead. (Hence ending up with things like a load/store architecture.)

I think the underlying idea is that by making each instruction do just one thing, it's much easier to make each instruction execute quickly, so that the processor can instead be made to execute more instructions per second, for a higher total data throughput - thus getting more done, more efficiently (since it takes less hardware to implement).

This doesn't mean that the instructions can't do complicated things (like, say, a step of AES encryption) - just that the instruction for it shouldn't also do other things. IIUC that is.)

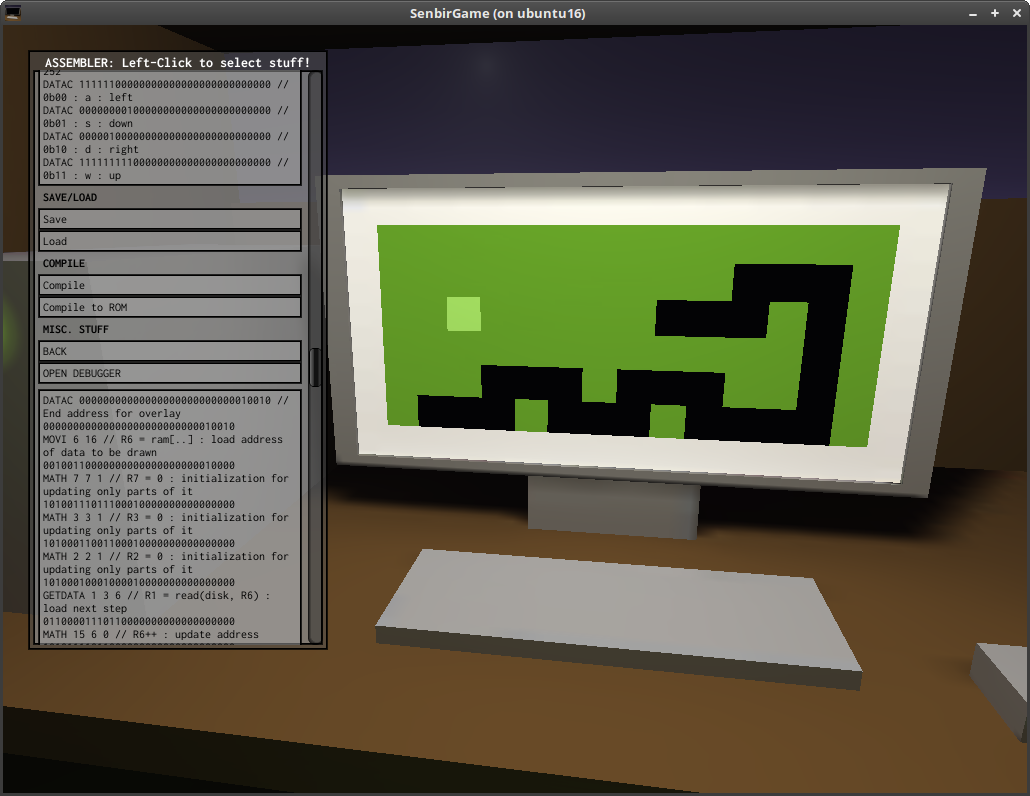

Regarding your RISC-06 ISA: I think I like it. It's certainly interesting.

I haven't fully grasped the entire instruction set yet, or how exactly to do much with it (like your win screen), but that would probably come with actually attempting to write something in it. At first glance I would think that having only two registers would be severely limiting, but I guess some of the instructions being able to instead directly use memory alleviates that (also some useful values being quickly available via specific instructions).

Also, numbers never going above 255 probably makes them easier to reason about. Besides, limitations can be inspiring, which might be another reason it seems easier.

One thing I might suggest, though, to make it easier to work with: consider acknowledging that it doesn't really have fixed-width (3-bit) opcodes. Instead, it has variable-width opcodes (I've seen ones I would consider to have 3 (HLT), 4 (MOV 0), 5 (BRN 0), 7 (OPR 4), and 8 (OPR 0 0) bits). The assembly instructions could then be set up and named accordingly, so that each name represents one operation and its arguments become simpler.

Re: the C++ VM implementation: nice! Well done. Good luck adding the rest! :) (I haven't done C++ myself.)

Re: reg+imm: well, I'll try to explain it in detail, but the short answer is that all the parameters are required, and the processor actually doesn't decide between registers and immediate-mode, it always uses both (and thus always does the same thing for that instruction).

I think an example might help; let's go with MUL for now, the others work equivalently.

1000: MUL reg4 reg4 reg4 imm16 // MUL dst src1 src2 val

    // dst = src1 * (src2 + val)

The first column is the opcode for the instruction, here 1000, followed by the name that is used for it in assembly code, here MUL.

The rest of the line, up to the // comment, describes the type and bit width of the parameters to this instruction. There are three distinct types:

- reg : register, these bits name a register to be used for this parameter

- imm : immediate, these bits constitute an immediate value that is used as-is

- zero : zeroes, these bits should be zero (only used in NOP; maybe ign (for ignore) would be better? I'm not sure)

In other words, this instruction has 4 parameters, the first three are 4 bits wide each and refer to registers, while the last is 16 bits wide and is an immediate value.

The comment on the first line shows the assembly instruction with its parameters again, but this time shows the names of the parameters instead of their types. They are in the same order as the first time, which is also the order they would be specified in when using the instruction in assembly code. (I haven't yet 100% decided upon the field ordering inside the binary instruction word.)

So, the first parameter is named "dst" and refers to a register. The next two are named "src1" and "src2" respectively, and also refer to registers. The last one is named "val" and is a 16-bit immediate value.
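To make the field widths a bit more concrete, here is a rough Python sketch of packing and unpacking such an instruction word. The layout (opcode, dst, src1, src2, val, from the most significant bits down) is purely an assumption for illustration, since the real field ordering isn't decided, and so is the two's-complement encoding of the immediate. The names encode and decode are made up for this example.

```python
# Hypothetical layout, most significant bits first:
#   opcode(4) dst(4) src1(4) src2(4) val(16)  -> 32 bits in total.
# The real field ordering is undecided; this is only one possibility.
MUL_OPCODE = 0b1000


def encode(opcode, dst, src1, src2, val):
    """Pack one instruction into a 32-bit word (assumed layout)."""
    for reg in (dst, src1, src2):
        if not 0 <= reg <= 0xF:
            raise ValueError("register number must fit in 4 bits")
    if not -0x8000 <= val <= 0x7FFF:
        raise ValueError("immediate must fit in 16 signed bits")
    # Assumption: negative immediates are stored as 16-bit two's complement.
    return (opcode << 28) | (dst << 24) | (src1 << 20) | (src2 << 16) | (val & 0xFFFF)


def decode(word):
    """Unpack a 32-bit word into (opcode, dst, src1, src2, val)."""
    val = word & 0xFFFF
    if val & 0x8000:  # sign-extend the 16-bit immediate
        val -= 0x10000
    return (word >> 28) & 0xF, (word >> 24) & 0xF, (word >> 20) & 0xF, (word >> 16) & 0xF, val


word = encode(MUL_OPCODE, 2, 3, 4, 10)
print(f"{word:#010x}")
print(decode(word))
```

With a different field ordering only the shift amounts would change; the parameter types and widths stay the same.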

The second comment line (slightly indented) shows the operation performed by this instruction, in a higher-level language pseudo-code.

Translating to prose, this means that MUL sets the dst (destination) register to the result of multiplying the src1 (source) register by the sum of the src2 register and the val immediate value.

In short, this is an add-and-multiply instruction - a concept I'm pretty sure I've stolen from somewhere, though I can't remember quite from where exactly.

In assembly code, it would look something like this:

MUL 2 3 4 10

which would take the value of register 4, add 10 to it, multiply the result by the value of register 3, and store the result of that in register 2: R2 = R3 * (R4 + 10)

As noted under the instruction list, most of the immediate values can be negative, so this is also a valid instruction:

MUL 2 2 0 -1

which would do R2 = R2 * (R0 - 1) = R2 * -1 and thus negate R2 (since R0 is always 0).
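If it helps, the two MUL examples can be traced with a few lines of Python. This is only a sketch of the semantics described above: the register file is a plain list, the starting values in R3 and R4 are made up, and it ignores word size, overflow, and whatever protects R0 from being written.

```python
def mul(regs, dst, src1, src2, val):
    """MUL dst src1 src2 val  ->  dst = src1 * (src2 + val)"""
    regs[dst] = regs[src1] * (regs[src2] + val)


regs = [0] * 16            # R0 stays 0 here because nothing writes to it
regs[3], regs[4] = 6, 5    # made-up starting values

mul(regs, 2, 3, 4, 10)     # MUL 2 3 4 10: R2 = R3 * (R4 + 10)
print(regs[2])             # 6 * (5 + 10) = 90

mul(regs, 2, 2, 0, -1)     # MUL 2 2 0 -1: R2 = R2 * (R0 - 1)
print(regs[2])             # 90 * -1 = -90
```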

ADD similarly requires 4 arguments (so "ADD 1 5" is not actually valid code), and works equivalently - add val to src2, then add that to src1, then save the result in dst.

So, to move the program counter forward by 5 (to skip the next 5 instructions), you could do this:

ADD 1 1 0 5

which works because R0 is always 0, so it becomes R1 = R1 + (0 + 5) = R1 + 5

Worth noting at this point is that, by the time the ADD executes, R1 already points at the address immediately after the ADD instruction, which is why this skips 5 instructions, instead of skipping 4 and running the 5th. Adding 0 is thus a no-op.

(This also means that moving backwards requires higher numbers than moving forwards - subtracting 0 is also a no-op, subtracting 1 is an infinite loop, and subtracting 2 jumps back to the instruction immediately before the current one. (If I had an explicit instruction for relative jumps, it might work differently, but setting R1 is essentially manipulating the internal state of the CPU directly, so no such niceties here.))
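The offsets are easy to get wrong by one, so here is a tiny Python sketch of the arithmetic. It assumes each instruction occupies exactly one address, and the function name next_address and the example address 10 are just placeholders.

```python
def next_address(addr, val):
    """Address executed after an 'ADD 1 1 0 val' located at addr."""
    r1 = addr + 1        # R1 already points past the ADD when it executes
    r1 = r1 + (0 + val)  # R1 = R1 + (R0 + val), with R0 always 0
    return r1


for val in (5, 0, -1, -2):
    print(val, next_address(10, val))
# 5  -> 16 (skips the 5 instructions at 11..15)
# 0  -> 11 (no-op, continues with the next instruction)
# -1 -> 10 (re-runs the ADD itself: infinite loop)
# -2 -> 9  (the instruction immediately before the ADD)
```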

To be honest, I expect that most uses of the arithmetic instructions will have a zero in one of the last two arguments, depending on whether the program wants to use a register value or an immediate value, but the CPU doesn't care - it always does the same thing: adding them together before applying the main operation.

Re: MMU, I agree that having one would probably be very nice, but you may want to take a look at how they typically work before you make too many plans about how to emulate one.

The details differ between MMU models, but from what I've seen (which admittedly isn't much), they typically require you to set up some data structures in main memory (to define the memory mapping(s)), usually with some specific alignment (for speed reasons), and then you have to tell the MMU where that data structure is, and enable it. Sometimes there are other settings too, like ways to enable/disable parts of the mapping for fast context switching, but that's model-specific.
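As a very rough illustration of that general shape (not modelled on any particular MMU), here is a toy single-level page table in Python. Everything specific in it is an assumption for the example: the page size of 16 words, the table entries being physical page numbers with a negative value meaning "unmapped", and all the names (ToyMMU, set_table, translate, PageFault).

```python
PAGE_SIZE = 16  # hypothetical page size, in memory words


class PageFault(Exception):
    """Raised when a virtual address has no mapping."""

    def __init__(self, address):
        super().__init__(f"page fault at address {address}")
        self.address = address


class ToyMMU:
    def __init__(self, memory):
        self.memory = memory   # main memory, shared with the CPU
        self.table_base = 0
        self.num_pages = 0
        self.enabled = False

    def set_table(self, base, num_pages):
        """Tell the MMU where the table lives, and enable it."""
        if base % PAGE_SIZE != 0:
            raise ValueError("page table must be page-aligned")
        self.table_base = base
        self.num_pages = num_pages
        self.enabled = True

    def translate(self, virtual):
        """Map a virtual address to a physical one, or raise PageFault."""
        if not self.enabled:
            return virtual
        page, offset = divmod(virtual, PAGE_SIZE)
        if not 0 <= page < self.num_pages:
            raise PageFault(virtual)
        entry = self.memory[self.table_base + page]
        if entry < 0:          # assumed convention: negative means unmapped
            raise PageFault(virtual)
        return entry * PAGE_SIZE + offset


memory = [0] * 64
memory[16:20] = [3, -1, 2, -1]   # the page table itself, stored in main memory
mmu = ToyMMU(memory)
mmu.set_table(base=16, num_pages=4)
print(mmu.translate(5))          # virtual page 0 -> physical page 3, so 53
```

Real MMUs usually add permission bits, multi-level tables and caching on top of this, but the "data structure in memory plus a pointer to it" part is the same idea.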

Another thing the MMU needs is some way to call the kernel when a page fault happens, including a way to tell it which address caused the fault, and unlike most platforms the TC-06 doesn't have any standard calling conventions (e.g. for interrupts) to rely on for that. I guess we'd need a way to store the fault handler address at minimum, and maybe some other things for various details.

I suppose you could make the offset register (what OFST manipulates) instead be a pointer to that MMU data structure, which enables the MMU when set. But that would completely break backwards compatibility with older programs that already use OFST (like your kernel), since it would suddenly work completely differently and trying to use it in the old way would probably make the system crash (since the MMU would suddenly be pointed at garbage data). Changing the way the OFST instruction itself works (parameters etc.) would cause similar issues.

Unless you don't care about backwards-compatibility (BC) breaks, I'd say a new opcode would be a better idea than overriding OFST, as at least it wouldn't have those BC issues - well, unless and until you change how the MMU works in such a way that that instruction would have to change as well, but it might be possible to plan for at least some of that.

I've been thinking that the device API (GETDATA/SETDATA) would be nicer for this, because it already has addressing we could use for multiple "registers" for those various pieces of required data, and it's sort of built in to the device concept that a device might not be present or might have been replaced with a different one. That's just my thinking though, you might feel differently about it.

I had another idea for at least part of the problem, though - namely that most of those values could probably be stored in memory, linked to the mapping data structure we point the MMU to. Then those mappings could be set up to protect those settings (along with the mappings themselves) so that user programs can't mess with them, only the kernel can. This may cause some wasted memory if the alignment doesn't match up perfectly, though. Also, that still leaves the initial pointer to that data structure without a safe place to live, so we'd still need one of the other solutions for that - and then we might as well use that solution for the rest, too.

On the other hand, if the MMU can protect memory, it can probably protect its own registers as well, and simply ignore any disallowed SETDATA calls (or complain to the kernel about it).

An interesting point from a security point of view is that the user program shouldn't be able to change the mappings, but the kernel should, so then we somehow need to transition from user mode privileges to kernel mode privileges without letting the user mode program switch the mode on its own (privilege escalation), despite it being in control of the CPU... Luckily there's a fairly simple solution, if the MMU has the right feature. (Namely having it switch mappings automatically right before calling the kernel's page fault handler. Then the mode switch happens by triggering a page fault, which the user mode program cannot do without transferring control to the kernel.)
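A toy Python model of that idea, just to show the shape of it: the only code path that sets kernel mode also sets the program counter to the kernel's handler, so a user program can't get one without the other. The class ToyMachine, its fields, and the page size of 16 are all invented for this sketch, and it says nothing about how Senbir would actually store the handler address.

```python
from dataclasses import dataclass, field


@dataclass
class ToyMachine:
    handler_address: int                 # set up by the kernel beforehand
    user_pages: set = field(default_factory=set)
    mode: str = "kernel"
    pc: int = 0
    fault_address: int = -1              # -1 means "no fault recorded yet"

    def enter_user_mode(self, entry_point):
        """Kernel hands control to a user program."""
        self.mode = "user"
        self.pc = entry_point

    def access(self, address, page_size=16):
        """Return True if the access is allowed, else take a page fault."""
        if self.mode == "kernel" or address // page_size in self.user_pages:
            return True
        # Page fault: record the address, switch mappings, and jump to the
        # kernel's handler, all in one step the user program can't split up.
        self.fault_address = address
        self.mode = "kernel"
        self.pc = self.handler_address
        return False


machine = ToyMachine(handler_address=200, user_pages={2})
machine.enter_user_mode(entry_point=32)
print(machine.access(40), machine.mode)              # allowed, still in user mode
print(machine.access(5), machine.mode, machine.pc)   # faults into the kernel handler
```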

Of course, none of that really matters if we don't think this kind of security is necessary in Senbir. If we assume that programs are never malicious and are always well-behaved (won't try to mess with the MMU), then we don't really need to protect it. (I'd prefer not to assume that, though.)

Re: the storage quandary: yeah, personally I'd probably do the safe and slow thing too, for much the same reasons. Many others wouldn't, though, whether because they didn't think of it or because they cared more about performance... Lots of examples of that. On the other hand, though, I suppose most of those people would never play Senbir to such a depth that it mattered anyway...