Yup they seem to have been indexed pretty quickly after I made this post. 🥳

James William Fletcher


Recent community posts

Such as my coin pusher: https://pushergames.itch.io/tuxpusher

It's been available for a long time; I think all of my games should be indexed by now?

Other games of mine lacking indexing:

Most of my games have never been indexed, which I think is pretty bad.

I made sure to fill in all the metadata, tags, etc.

Hey, your hitboxes for the play buttons on Ball and Flagman are broken; you might want to check the others too. I am using the Chrome browser. https://itizso.itch.io/retrofab

The clickable area is just a very thin hitbox above the button.

These are very good btw, well done.

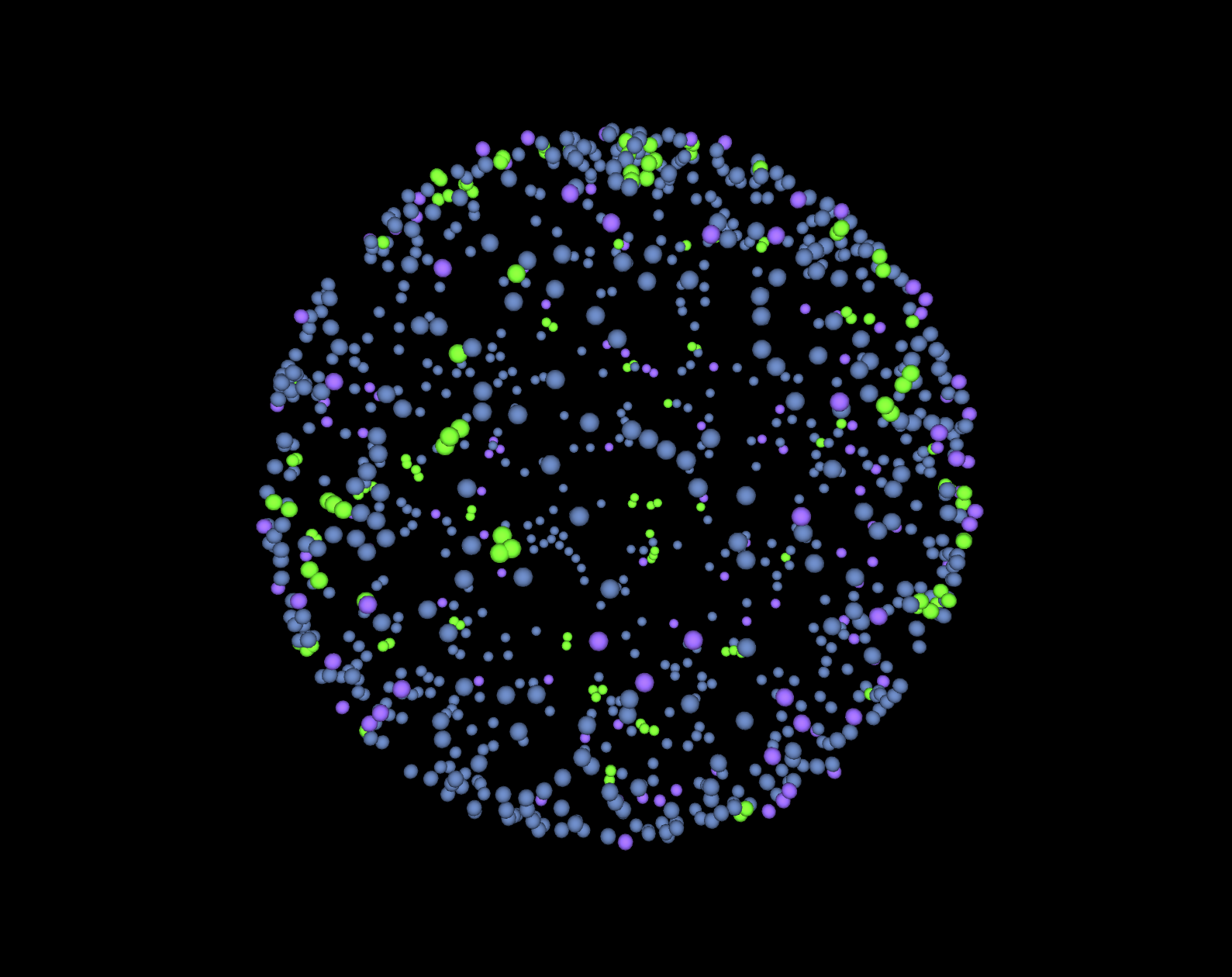

3D Music Visualisation:

https://pushergames.itch.io/autechre (Abstract / Experimental)

https://pushergames.itch.io/catsteps (Braindance?)

https://pushergames.itch.io/phonkasphere (Phonk)

https://pushergames.itch.io/cyberdyne (Phonk)

I've reached the text limit for my original post, so I will be adding the auxiliary/uncategorised additions here.

Various other projects related to Text-To-3D and Image-To-3D:

https://github.com/pals-ttic/sjc

https://github.com/ashawkey/fantasia3d.unofficial

https://github.com/Gorilla-Lab-SCUT/Fantasia3D

https://github.com/baaivision/GeoDream

https://github.com/thu-ml/prolificdreamer

https://github.com/yuanzhi-zhu/prolific_dreamer2d

https://github.com/nv-tlabs/GET3D

https://github.com/alvinliu0/HumanGaussian

https://github.com/hustvl/GaussianDreamer

https://github.com/songrise/AvatarCraft

https://github.com/Gorilla-Lab-SCUT/tango

https://github.com/chinhsuanwu/dreamfusionacc (simplified implementation)

https://github.com/yyeboah/Awesome-Text-to-3D

https://github.com/atfortes/Awesome-Controllable-Generation

https://github.com/pansanity666/Awesome-Avatars

https://github.com/pansanity666/Awesome-pytorch-list

https://github.com/StellarCheng/Awesome-Text-to-3D

https://nvlabs.github.io/eg3d/

https://research.nvidia.com/labs/toronto-ai/nglod/

https://pratulsrinivasan.github.io/nerv/

https://jasonyzhang.com/ners/

https://github.com/eladrich/latent-nerf

https://github.com/nv-tlabs/LION

https://github.com/abhishekkrthakur/StableSAM

https://github.com/NVlabs/nvdiffrast

https://github.com/NVlabs/nvdiffrec

https://www.nvidia.com/en-us/omniverse/

https://blogs.nvidia.com/blog/gan-research-knight-rider-ai-omniverse/

https://github.com/maximeraafat/BlenderNeRF

https://github.com/colmap/colmap

https://github.com/EPFL-VILAB/omnidata/tree/main/omnidata_tools/torch

https://github.com/isl-org/MiDaS

https://github.com/isl-org/ZoeDepth

https://github.com/nv-tlabs/nglod

https://github.com/orgs/NVIDIAGameWorks

https://github.com/3DTopia/3DTopia

https://github.com/3DTopia/threefiner

https://github.com/liuyuan-pal/PointUtil

https://github.com/facebookresearch/co3d

https://github.com/BladeTransformerLLC/gauzilla

https://github.com/pierotofy/OpenSplat

https://github.com/chenhsuanlin/bundle-adjusting-NeRF

https://github.com/maturk/BARF-nerfstudio

https://lingjie0206.github.io/papers/NeuS/

https://github.com/CompVis/stable-diffusion

https://github.com/NVlabs/eg3d

https://github.com/rupeshs/fastsdcpu

https://github.com/3DTopia/LGM

https://github.com/VAST-AI-Research/TripoSR

https://github.com/ranahanocka/point2mesh

https://github.com/Fanghua-Yu/SUPIR

https://github.com/Mikubill/sd-webui-controlnet

https://huggingface.co/blog/MonsterMMORPG/supir-sota-image-upscale-better-than-m...

https://depth-anything.github.io/

https://github.com/philz1337x/clarity-upscaler

Over the years I have seen various pieces of software that render 3D models as 2D isometric art come and go, but currently the Drububu.com Voxelizer seems to be the main way to achieve this effect.

Is anyone aware of any other software that can produce 2D isometric art from 3D assets, or maybe even plugins for existing 3D modelling software such as Blender? If so, please share in this thread.
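For anyone rolling their own, the core of turning a 3D voxel model into 2D isometric art is a fixed projection from grid cells to screen pixels plus a back-to-front draw order. A minimal sketch, assuming the common 2:1 "pixel-art" isometric tile (tile width twice the tile height) and unit cubes; the function names and default tile sizes are hypothetical and not taken from any of the tools mentioned.

```python
def iso_project(x: int, y: int, z: int, tile_w: int = 32, tile_h: int = 16) -> tuple[int, int]:
    """Map a 3D grid cell (x, y, z) to 2D screen pixel coordinates.

    Assumes a 2:1 isometric tile: +x moves right-down on screen,
    +y moves left-down, and +z moves straight up by one tile height.
    """
    sx = (x - y) * (tile_w // 2)
    sy = (x + y) * (tile_h // 2) - z * tile_h
    return sx, sy


def draw_order(cells):
    """Sort unit cubes back-to-front (painter's algorithm).

    Cells with a larger x + y + z sit closer to the viewer in this
    projection, so drawing them last lets them overwrite farther cubes.
    """
    return sorted(cells, key=lambda c: (c[0] + c[1] + c[2], c[2]))
```

Each cube sprite is then blitted at `iso_project(*cell)` in `draw_order` order; the sort key is the usual choice for equal-sized cubes, while mixed-size objects need a more careful depth test.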

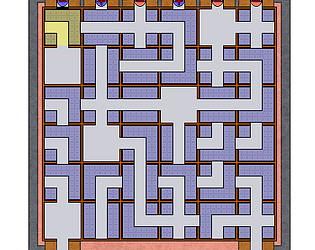

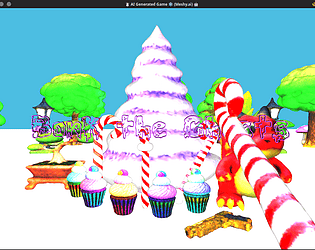

I am remaking a 2D game I made in 2009 with a fresh coat of 3D AI-generated graphics!

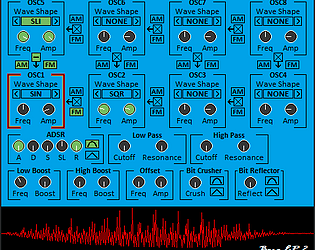

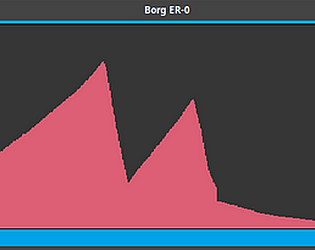

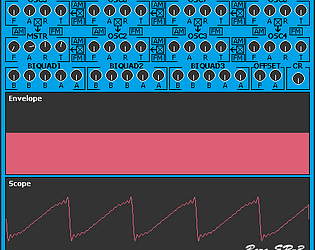

I maintain a small repository of free sound effects I have made with my Borg ER-3 tone generator project: https://github.com/mrbid/Sound-Effects

These are free to use, although I tend not to use sound in my games these days because I find it a bit tacky and annoying in video games. I can finally appreciate why adults used to get so frustrated by the crunchy noises coming from my GameBoy as a child.

Other sound effect archives you may be interested in:

1001 Sound Effects (*free)

App FX (paid)

BBC Complete Sound Effects Library (paid)

BBC Rewind Sound Effects (*free)

Sound Ideas Sound Effects Library 1000 (paid)

Sound Ideas released a lot of sound effect packs, such as the Warner Bros Sound Effects Library.

And ofc... https://freesound.org/

My first ever sound effect libraries were:

https://www.amazon.co.uk/Sound-Effects-Vol-Machines-Movement/dp/B000M4RCX2/

https://www.amazon.co.uk/Sound-Effects-Vol-Nature-Animals/dp/B000M4RCXC/

https://www.amazon.co.uk/Sound-Effects-Vol-People-Sounds/dp/B000M4RCXM

https://www.amazon.co.uk/Sound-Effects-Vol/dp/B000M4RCXW/

Most sound effects are not game-ready and need to be mastered with software such as Audacity.
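Peak normalization is usually one of the first steps when mastering a raw sound effect (Audacity's Normalize effect does a version of this). A minimal standard-library sketch, assuming 16-bit PCM WAV input; the function name and the -1 dBFS default target are illustrative choices, not a fixed standard, and real mastering usually also involves trimming, fades and EQ.

```python
import array
import sys
import wave


def normalize_wav(src: str, dst: str, target_dbfs: float = -1.0) -> float:
    """Scale a 16-bit PCM WAV so its loudest sample peaks at target_dbfs.

    Returns the gain factor that was applied.
    """
    with wave.open(src, "rb") as r:
        params = r.getparams()
        if params.sampwidth != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        samples = array.array("h", r.readframes(params.nframes))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian

    peak = max((abs(s) for s in samples), default=0)
    if peak == 0:
        gain = 1.0  # silent file: nothing to normalize
    else:
        target = 32767 * 10 ** (target_dbfs / 20)  # dBFS -> linear amplitude
        gain = target / peak

    # Apply the gain, clamping to the 16-bit range to avoid wrap-around.
    out = array.array("h", (max(-32768, min(32767, round(s * gain))) for s in samples))
    if sys.byteorder == "big":
        out.byteswap()
    with wave.open(dst, "wb") as w:
        w.setparams(params)
        w.writeframes(out.tobytes())
    return gain
```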

Websites where you can download free and paid human-made 3D assets:

https://downloadfree3d.com (my favourite)

https://www.turbosquid.com

https://www.cgtrader.com

https://sketchfab.com

https://www.thingiverse.com

https://free3d.com

https://poly.cam/explore

https://3d.si.edu/explore

Although these days I am more into 3D content from generative AI (text-to-3D):

https://imageto3d.org

https://meshy.ai

https://lumalabs.ai/genie

https://www.sudo.ai/3dgen

https://www.tripo3d.ai/

https://3d.csm.ai/

So much so that I have created two asset packs of hand-picked content from two of these generative services:

https://archive.org/details/@mrbid

https://archive.org/details/meshy-collection-1.7z (800 unique assets)

https://archive.org/details/luma-generosity-collection-1.7z (3,700 unique assets)

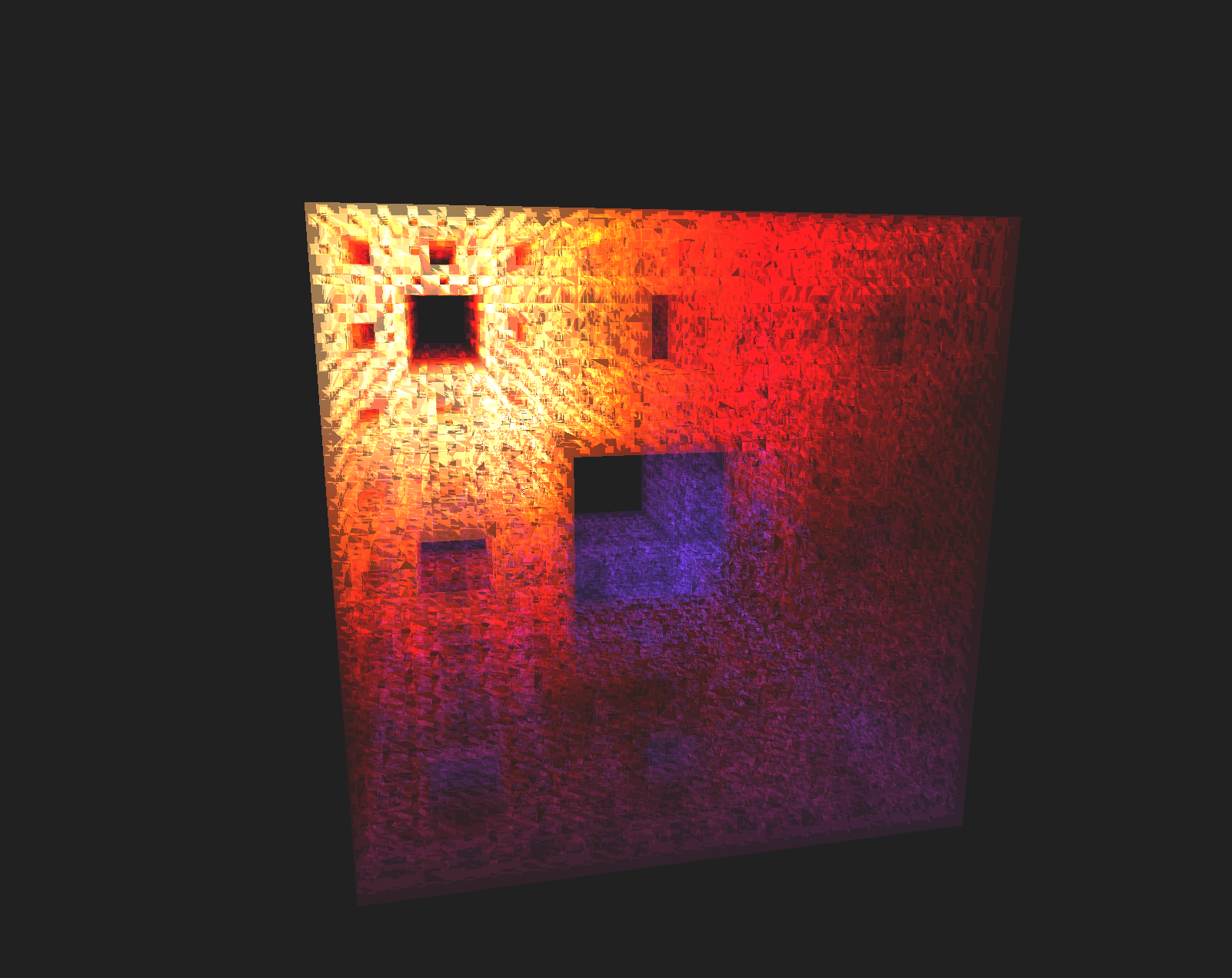

Details concerning Generative AI:

The forefront/SOTA of this technology is maintained by a project called ThreeStudio at the moment.

Typically, Stable Diffusion is used to generate consistent images of the same object from different viewing angles; however, regular Stable Diffusion models are not capable of this, so a fine-tuned model such as Zero123++ is used for this purpose. Once these images of the object have been produced from different view/camera angles, they are fed into a Neural Radiance Field (NeRF), which learns a volumetric field of densities and colours (effectively a point cloud of densities); most projects use NerfAcc to accelerate this step. Finally, the density field is turned into a triangulated mesh using Nvidia's DMTet.

Stable-Dreamfusion can be credited as the first project that really kicked off this academic field for text-to-3D, and while pre-canned image-to-3D solutions are currently available, they tend not to perform quite as well as most public text-to-3D solutions.
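To make the NeRF step more concrete: a NeRF does not store a mesh; it stores a function giving a density (sigma) and a colour at any 3D point, and an image is produced by alpha-compositing samples along each camera ray. A minimal pure-Python sketch of that compositing rule (the quadrature used in the original NeRF paper), assuming the densities and colours have already been queried at sorted sample distances; the function name is hypothetical and this is not NerfAcc's API.

```python
import math


def composite_ray(t, sigma, rgb):
    """Alpha-composite samples along one ray, NeRF-style.

    t:     sorted sample distances along the ray
    sigma: volume density at each sample (>= 0)
    rgb:   (r, g, b) colour at each sample
    Returns (colour, opacity, weights).
    """
    n = len(t)
    transmittance = 1.0  # fraction of light that reaches the current sample
    colour = [0.0, 0.0, 0.0]
    weights = []
    for i in range(n):
        # Spacing to the next sample; the last sample gets a huge delta (far away).
        delta = t[i + 1] - t[i] if i + 1 < n else 1e10
        alpha = 1.0 - math.exp(-sigma[i] * delta)  # chance the ray stops in this segment
        w = transmittance * alpha
        weights.append(w)
        for k in range(3):
            colour[k] += w * rgb[i][k]
        transmittance *= 1.0 - alpha
    return tuple(colour), sum(weights), weights
```

Training a NeRF means adjusting the network behind `sigma` and `rgb` until these composited colours match the input views; acceleration libraries mainly cut down how many samples per ray have to be evaluated.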

If you are interested in learning more about generative 3D, here are some links you can follow up:

Various papers and Git repositories related to the topic of text-to-3D:

https://paperswithcode.com/task/text-to-3d

https://github.com/topics/text-to-3d

Pre-canned solutions that execute the entire process for you:

https://github.com/threestudio-project/threestudio (ThreeStudio)

https://github.com/bytedance/MVDream-threestudio

https://github.com/THU-LYJ-Lab/T3Bench

https://arxiv.org/pdf/2209.14988.pdf (DreamFusion)

https://dreamfusion3d.github.io/

https://arxiv.org/pdf/2211.10440.pdf (Magic3D)

https://research.nvidia.com/labs/dir/magic3d/

https://arxiv.org/pdf/2305.16213.pdf (ProlificDreamer)

https://arxiv.org/pdf/2106.09685.pdf (LoRA, which ProlificDreamer uses as a step)

https://ml.cs.tsinghua.edu.cn/prolificdreamer/

https://arxiv.org/pdf/2303.13873.pdf (Fantasia3D)

https://fantasia3d.github.io/

https://research.nvidia.com/labs/toronto-ai/ATT3D/

https://research.nvidia.com/labs/toronto-ai/GET3D/

Stable Diffusion:

https://easydiffusion.github.io/

https://civitai.com/

https://nightcafe.studio

https://starryai.com/

https://dreamlike.art/

https://www.mage.space/

https://www.midjourney.com/showcase

https://lexica.art/

Zero-shot generation of consistent images of the same object:

https://github.com/cvlab-columbia/zero123

https://zero123.cs.columbia.edu/

https://github.com/SUDO-AI-3D/zero123plus

https://github.com/One-2-3-45/One-2-3-45

https://one-2-3-45.github.io/

https://github.com/SUDO-AI-3D/One2345plus

https://sudo-ai-3d.github.io/One2345plus_page/

https://github.com/bytedance/MVDream

https://mv-dream.github.io/

https://liuyuan-pal.github.io/SyncDreamer/

https://github.com/liuyuan-pal/SyncDreamer

https://www.xxlong.site/Wonder3D/

https://github.com/xxlong0/Wonder3D

The above zero-shot generation models tend to be trained on datasets of 3D objects. At the moment, Objaverse-XL is the largest dataset of 3D objects at 10+ million in size, although it does include data from Thingiverse, which has no color or texture information. (These are datasets of download links to free 3D content, not datasets of the actual content itself.)

https://github.com/allenai/objaverse-xl

https://objaverse.allenai.org/

The Neural Radiance Field (NeRF):

https://github.com/NVlabs/instant-ngp

https://github.com/Linyou/taichi-ngp-renderer

https://docs.nerf.studio/

https://github.com/nerfstudio-project/nerfacc

https://github.com/eladrich/latent-nerf

https://github.com/naver/dust3r

(CPU NeRF below)

https://github.com/Linyou/taichi-ngp-renderer

https://github.com/kwea123/ngp_pl

https://github.com/Kai-46/nerfplusplus

NeRF to 3D Mesh:

https://research.nvidia.com/labs/toronto-ai/DMTet/

https://github.com/NVIDIAGameWorks/kaolin/blob/master/examples/tutorial/dmtet_tu...

A lot of good resources can be found at: https://huggingface.co/

I've also written a Medium article which has a more wordy version of what I have written here with some image examples: https://james-william-fletcher.medium.com/text-to-3d-b607bf245031

If you are into the voxel art aesthetic, you can voxelize any 3D asset using the free and open-source Drububu.com Voxelizer or ObjToSchematic.
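If you'd rather script it, one simple approach to surface voxelization is to sample points densely over every triangle and mark the grid cells they land in. A minimal sketch, assuming the mesh is given as plain vertex/triangle lists; this is a basic sampling approach for illustration, not how Drububu.com or ObjToSchematic are implemented, and it can miss cells that the surface only grazes at a corner.

```python
import math


def voxelize_surface(vertices, triangles, voxel_size):
    """Return the set of integer (i, j, k) cells touched by the mesh surface.

    vertices:  list of (x, y, z) coordinates
    triangles: list of (a, b, c) vertex indices
    """
    cells = set()
    for a, b, c in triangles:
        p0, p1, p2 = vertices[a], vertices[b], vertices[c]
        # The longest edge sets the sampling density: about 2 samples per voxel.
        longest = max(math.dist(p0, p1), math.dist(p1, p2), math.dist(p2, p0))
        steps = max(1, math.ceil(2 * longest / voxel_size))
        for i in range(steps + 1):
            for j in range(steps + 1 - i):
                # Barycentric weights on a regular grid over the triangle;
                # clamp w so rounding error never pushes a point off the triangle.
                u, v = i / steps, j / steps
                w = max(0.0, 1.0 - u - v)
                point = [u * p0[d] + v * p1[d] + w * p2[d] for d in range(3)]
                cells.add(tuple(math.floor(coord / voxel_size) for coord in point))
    return cells
```

The resulting cells can be coloured by sampling the model's texture at the same points before exporting them to a voxel editor.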

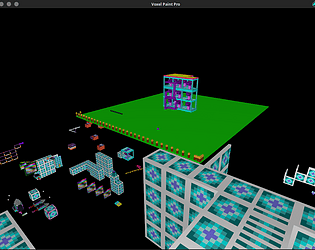

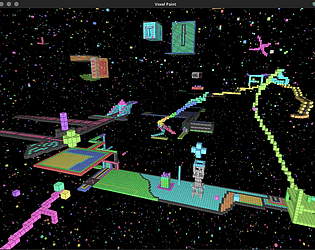

I maintain a project called Woxel that allows users to create voxel art in the web browser and export it as a 3D PLY file. It's like a simplified MagicaVoxel / Goxel but with a Minecraft-style control system.

Woxel isn't the only voxel editor that runs in a web browser, though; there are more listed in my article here! I also have a more comprehensive list of voxel editors here.
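For anyone curious what a voxel PLY export involves: PLY is a simple format with a text header declaring the elements and their properties, followed by the data rows. A minimal sketch that writes coloured voxels as unit cubes in ASCII PLY; the function name and the dict-of-colours input are hypothetical, and this is not Woxel's actual exporter.

```python
def voxels_to_ply(voxels):
    """Build an ASCII PLY string with one coloured unit cube per voxel.

    voxels: dict mapping integer (x, y, z) -> (r, g, b) values 0-255
    """
    # Unit-cube corners and its 6 quad faces (indices into the corners),
    # wound counter-clockwise when viewed from outside the cube.
    corners = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
               (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
    quads = [(0, 3, 2, 1), (4, 5, 6, 7), (0, 1, 5, 4),
             (2, 3, 7, 6), (1, 2, 6, 5), (3, 0, 4, 7)]

    vertex_lines, face_lines = [], []
    for (x, y, z), (r, g, b) in voxels.items():
        base = len(vertex_lines)  # index of this cube's first vertex
        for cx, cy, cz in corners:
            vertex_lines.append(f"{x + cx} {y + cy} {z + cz} {r} {g} {b}")
        for quad in quads:
            face_lines.append("4 " + " ".join(str(base + i) for i in quad))

    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(vertex_lines)}",
        "property float x", "property float y", "property float z",
        "property uchar red", "property uchar green", "property uchar blue",
        f"element face {len(face_lines)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    return "\n".join(header + vertex_lines + face_lines) + "\n"
```

Emitting 8 vertices and 6 faces per voxel keeps the sketch short but produces larger files than necessary; merging shared vertices and skipping faces between adjacent voxels is the usual next step.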

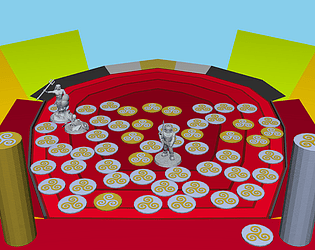

Tux Pusher is a reproduction of the classic seaside arcade coin pusher. You have gold and silver coins: silver coins are released automatically while you hold down the left mouse button, but gold coins must be played one at a time with individual clicks. The gold coins are your reserve coins. The aim of the game is to collect all 6 Tux penguin figurines.

Thanks for reading!