Hey folks! This is my first post here. I'm currently working on a personal project (tentatively) called Bestiarium. It's still in the early prototyping phase, which means I don't know whether it will ever be finished (it might simply turn out to not be fun in the end). My first step was this small toy that I published here on itch.

I'm reblogging this from my personal blog, which I hope is not a problem. I'm trying to find the platform where these articles/tutorials actually help other developers the most, which is why I'm copying it here instead of just posting an external link. I wasn't sure if I should post this in this area or in "General Development", but anyway, if you think this kind of stuff is interesting enough for me to keep a devlog on, let me know :)

After generating procedural chess pieces, the obvious next step would be full-blown creatures. There's this game idea I'm playing around with which, like every side project, I may or may not be doing - I'm an expert in Schrödinger's gamedev. This one even has a name, so it might go a longer way.

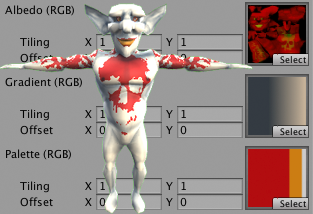

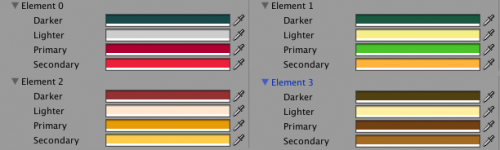

Bestiarium is deeply dependent on procedural character generation, so I prototyped a playable Demon Invocation Toy - try it out before reading! In this (quite big) post, I'll talk about some of the techniques I've experimented with. Sit tight and excuse the programmer art!

Part 1: Size matters

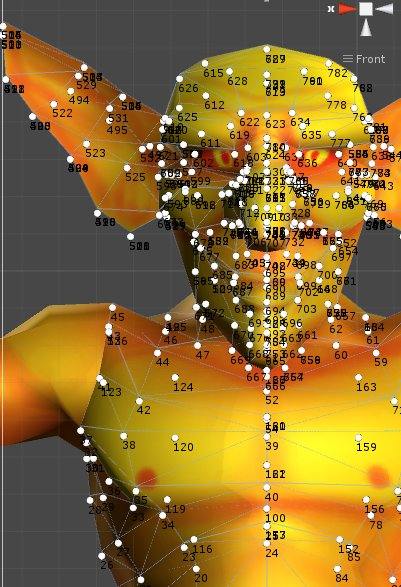

One thing I always played around with in my idle time when I worked on the web version of Ballistic was resizing bones and making hyper-deformed versions of the characters (and I'm glad that apparently I'm not the only one who has fun with that kind of thing). Changing proportions can completely transform a character by changing its silhouette, so the first thing I tried out was simply getting some old models and rescaling a bunch of bones randomly to see what came out of it.

One thing you have to remember is that your bones will usually be in a hierarchy, so if you resize a parent at runtime, its children get scaled along with it. This means you have to apply the inverse scale to the children to make sure they stay the same size as before. So you end up with something like this:

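```csharp
// Scales each bone in the limb uniformly, then applies the inverse scale to its
// direct children so they keep their original size.
private float ScaleLimb(List<Transform> bones, float scale) {
    for (int i = 0; i < bones.Count; i++) {
        bones[i].localScale = new Vector3(scale, scale, scale);

        // Iterating a Transform enumerates its direct children.
        foreach (Transform t in bones[i]) {
            t.localScale = Vector3.one / scale;
        }
    }
    return scale;
}
```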

But that leads to another problem: you're making legs shorter and longer, so some of your characters will end up with their feet either up in the air or below ground level. I could tackle this in two ways:

- Actually research a proper way of repositioning the character's feet via IK and adjust the body height based on that.

- Kludge.

I don't know if you're aware, but gambiarras (improvised, duct-tape fixes) are not only a part of Brazilian culture, but also dang fun to talk about when they actually work. So I had an idea for a quick fix that would let me keep prototyping. This was the result:

Unity has a function for SkinnedMeshRenderers called BakeMesh, which dumps the current state of your mesh into a new one. I was already using that for other things, so while I went through the baked mesh's vertices, I cached the one with the lowest Y coordinate, and then offset the root transform by that amount. Dirty, but it was done in 10 minutes and worked well enough, so it allowed me to move on. Nowadays I'm not using the bake function for anything else, so I could probably switch to something like a raycast from the foot bone. Sounds way less fun, right?
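
For the curious, here is a minimal sketch of how that kludge could look. This is not the code from the prototype: `FootGrounding`, `SnapToGround` and the `groundY` parameter are names made up for illustration, and it assumes the baked vertices are relative to the renderer's position and rotation with the scale already baked in (which, as far as I know, is what `BakeMesh` does when called without extra arguments).

```csharp
using UnityEngine;

// Hypothetical helper, not the original project's code.
public static class FootGrounding
{
    // Moves `root` vertically so the lowest vertex of the posed mesh sits at `groundY`.
    // Assumes `skin` lives somewhere under `root`, so moving the root moves the mesh.
    public static void SnapToGround(SkinnedMeshRenderer skin, Transform root, float groundY = 0f)
    {
        var baked = new Mesh();
        skin.BakeMesh(baked); // snapshot of the mesh in its current pose

        // Assumption: baked vertices already include the renderer's scale,
        // so only position and rotation are applied to reach world space.
        Transform t = skin.transform;
        Matrix4x4 toWorld = Matrix4x4.TRS(t.position, t.rotation, Vector3.one);

        float lowestY = float.PositiveInfinity;
        foreach (Vector3 v in baked.vertices)
        {
            float y = toWorld.MultiplyPoint3x4(v).y;
            if (y < lowestY) lowestY = y;
        }

        // The baked mesh is temporary, so release it once we have the number.
        UnityEngine.Object.Destroy(baked);

        // Skip the offset if the mesh had no vertices at all.
        if (!float.IsPositiveInfinity(lowestY))
        {
            root.position += Vector3.up * (groundY - lowestY);
        }
    }
}
```

You would call something like `FootGrounding.SnapToGround(skin, transform)` right after rescaling the bones. It only looks at one pose, so it says nothing about what happens once an animation lifts or lowers the feet.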

Part 2: Variations on a theme

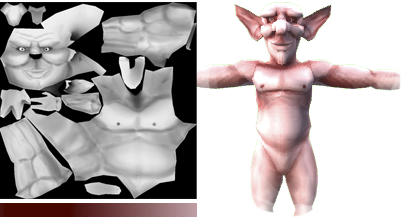

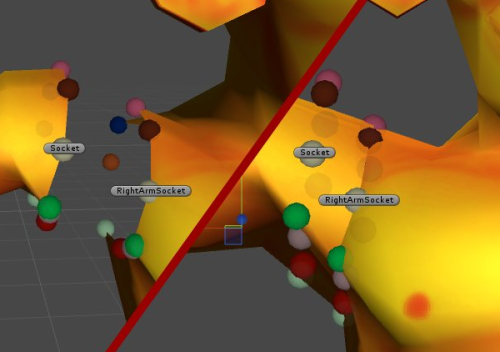

I started looking into modular characters, but ran into a problem: modularity creates seams, so it only looks great on clothed characters (e.g. it's easy to hide a seam for the hands in shirt sleeves, for the torso in the pants, etc.). In Bestiarium, however, I want naked, organic creatures, so the borders between parts have to look seamless.

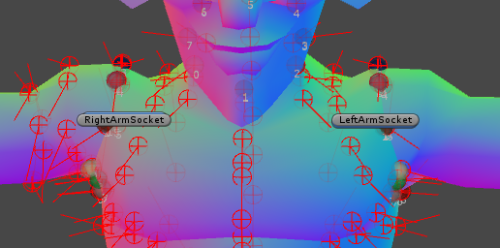

This is probably the problem I poured most of my time into, and yet I couldn't find a good solution, so I timed out on it. The basics are easy: make sure you have "sockets" between the body parts that are the same size, so you can merge the vertices together. But merging vertices is way more complicated than it seems when you also have to take care of UV coordinates, vertex blend weights, smoothing groups, etc. Usually, I ended up screwing up either the model, the extra vertices that create the smoothing groups, or the UV coordinates. I even tried color coding the vertices before exporting to know which ones I should merge, but no cigar. I'm pretty sure I missed something very obvious, so I'll go back to that later on. If you have any pointers regarding that, please comment!

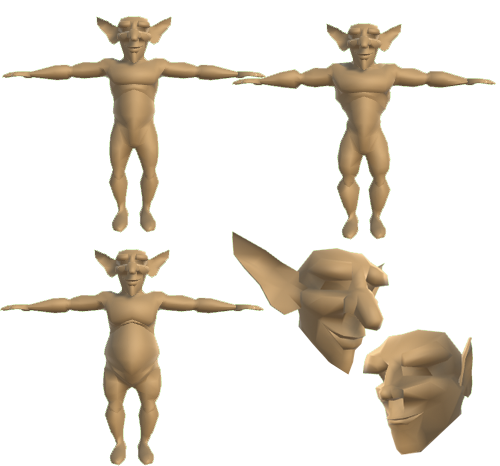

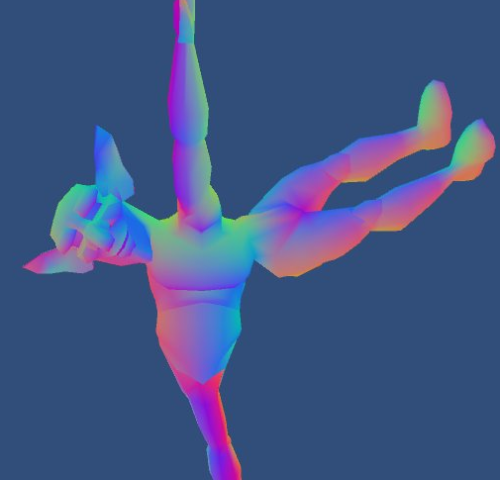

However, since I wanted to move on, I continued with the next experiment: blend shapes. For that, I decided it was time to build a new model from scratch. I admit that the best thing would be trying out something that wasn't a biped (since I've been testing with bipeds all this time), but that would require a custom rig, and not having IK wouldn't be an option anymore, so I kept it simple.

The shapes were also designed to alter the silhouette, so they needed to be as different from the base as possible. From the base model, I made a different set of ears, a head with features that were less exaggerated, a muscular body and a fat one.
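
To give an idea of how shapes like these can be mixed at runtime, here is a small sketch, assuming the model was imported into Unity with its blend shapes intact. `RandomBlendShapes`, the `skin` field and `Randomize` are hypothetical names for illustration, not what the prototype actually uses, and the 0 to 100 range is just Unity's usual convention for blend shape weights.

```csharp
using UnityEngine;

// Hypothetical component, not the original project's code.
public class RandomBlendShapes : MonoBehaviour
{
    // Assumed to be assigned in the inspector.
    [SerializeField] private SkinnedMeshRenderer skin;

    // Gives every blend shape on the mesh an independent random weight.
    public void Randomize()
    {
        Mesh mesh = skin.sharedMesh;
        for (int i = 0; i < mesh.blendShapeCount; i++)
        {
            // Assumption: weights run from 0 (base shape) to 100 (full shape).
            float weight = Random.Range(0f, 100f);
            skin.SetBlendShapeWeight(i, weight);
        }
    }
}
```

Rolling every shape independently means opposing shapes (say, the muscular and the fat body) can both end up near 100 at once. Whether that looks good or broken depends on how the shapes were modelled, so you may want to pick one shape per group instead.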