The only thing I get when trying to interact with any of the NPCs is "The LLM service has encountered an error: an error occurred while sending the request". I downloaded the model, renamed it, and placed it into /KoboldCPP. After starting Silverpine.exe, the command window flashes 'Failed to execute', and later in the line is 'koboldcpp'. Any ideas?

If you only have 6 gb of graphics memory combined with 16 gb of ram, it might choke while loading the model if other applications are taking up too much ram.

If you have exactly 10 gb of graphics memory, the game will fully offload the model to the GPU which should take 9.17 gb, but I have no way of verifying if this actually works under real-world conditions since I only have 8 gb myself.

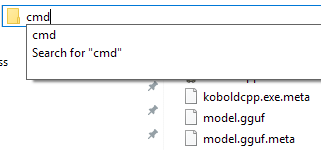

To see what the problem is, open a command prompt in the StreamingAssets folder as shown in the picture and run this:

koboldcpp.exe --model "model.gguf" --usecublas --gpulayers 17 --multiuser --skiplauncher --highpriority

Replace --usecublas with --usevulkan if you don't have an Nvidia GPU.

Replace 17 with 27 if you have 8 gb of vram, or 43 if you have 10 gb or more.
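If it helps, here's a rough Python sketch of how the command above gets put together from the numbers in this post. The function names are made up for illustration, and treating anything under 8 gb as the 17-layer case (and 8 to 10 gb as the 27-layer case) is my assumption; only the three layer counts and the flags come from the instructions above.

```python
def gpu_layers_for_vram(vram_gb: float) -> int:
    """Pick a --gpulayers value using the numbers quoted in this thread."""
    if vram_gb >= 10:
        return 43  # 10 gb or more
    if vram_gb >= 8:
        return 27  # 8 gb (assumed to also cover 8 to 10 gb)
    return 17      # value from the base command (assumed for under 8 gb)


def build_command(vram_gb: float, nvidia: bool = True, model: str = "model.gguf") -> list:
    """Assemble the koboldcpp.exe argument list shown above."""
    backend = "--usecublas" if nvidia else "--usevulkan"
    return [
        "koboldcpp.exe",
        "--model", model,
        backend,
        "--gpulayers", str(gpu_layers_for_vram(vram_gb)),
        "--multiuser",
        "--skiplauncher",
        "--highpriority",
    ]
```

For example, `" ".join(build_command(8, nvidia=False))` gives the Vulkan variant with 27 layers.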

This way it won't close when it throws the error.

If it's running out of memory it should throw something like:

ggml_vulkan: Device memory allocation of size 1442316288 failed.

ggml_vulkan: vk::Device::allocateMemory: ErrorOutOfDeviceMemory

llama_kv_cache_init: failed to allocate buffer for kv cache

llama_new_context_with_model: llama_kv_cache_init() failed for self-attention cache
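If you'd rather not eyeball the output, a small sketch like this could scan a saved copy of the console text for the lines above. The marker strings are copied from that sample output; other backends may word their errors differently, so treat the list as an assumption rather than a complete set. The function names are hypothetical.

```python
import re

# Substrings taken from the sample out-of-memory output above.
OOM_MARKERS = (
    "ErrorOutOfDeviceMemory",
    "Device memory allocation of size",
    "failed to allocate buffer for kv cache",
)


def looks_like_out_of_memory(log_text: str) -> bool:
    """Return True if any known out-of-memory marker appears in the log."""
    return any(marker in log_text for marker in OOM_MARKERS)


def failed_allocation_mib(log_text: str):
    """Return the size of the failed allocation in MiB, or None if absent."""
    match = re.search(r"Device memory allocation of size (\d+) failed", log_text)
    if match is None:
        return None
    return int(match.group(1)) / (1024 * 1024)
```

On the sample above, the failed allocation of 1442316288 bytes works out to 1375.5 MiB, which gives a rough idea of how much more memory it wanted.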

You can use the KoboldCPP GUI to manually adjust things until it works if this is the case, since the game just uses whatever KoboldCPP process is already running in the background when you launch it.
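To check whether a KoboldCPP process is actually up before launching the game, something like the sketch below should do. It assumes KoboldCPP is listening on 127.0.0.1 port 5001, which is its usual default; I can't confirm from this thread that the game uses that port, so change it if yours differs. The function name is made up.

```python
import socket


def koboldcpp_is_listening(host: str = "127.0.0.1", port: int = 5001,
                           timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    Port 5001 is an assumption (KoboldCPP's usual default), not something
    stated in this thread.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

This only tells you that something is listening on the port, not that the model finished loading, so still wait for KoboldCPP to report it's ready.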

Do let me know what the problem is though so I can fix it for the next version.

I'm running 32GB RAM and 12GB VRAM on an RTX 3060. Would be happy to do any testing of things that you can't do yourself. I know nothing about programming, but I can follow instructions and report back flawlessly. Here's the traceback info that command came up with --

Traceback (most recent call last):

File "koboldcpp.py", line 4451, in <module>

main(parser.parse_args(),start_server=True)

File "koboldcpp.py", line 4039, in main

init_library() # Note: if blas does not exist and is enabled, program will crash.

File "koboldcpp.py", line 427, in init_library

handle = ctypes.CDLL(os.path.join(dir_path, libname))

File "PyInstaller\loader\pyimod03_ctypes.py", line 55, in __init__

pyimod03_ctypes.PyInstallerImportError: Failed to load dynlib/dll 'C:\\Users\\me.DESKTOP-R1SATEP\\AppData\\Local\\Temp\\_MEI122842\\koboldcpp_cublas.dll'. Most likely this dynlib/dll was not found when the application was frozen.

[10308] Failed to execute script 'koboldcpp' due to unhandled exception!

---------------------

Using --usevulkan receives this response------

Traceback (most recent call last):

File "koboldcpp.py", line 4451, in <module>

main(parser.parse_args(),start_server=True)

File "koboldcpp.py", line 4099, in main

loadok = load_model(modelname)

File "koboldcpp.py", line 871, in load_model

ret = handle.load_model(inputs)

OSError: [WinError -1073741795] Windows Error 0xc000001d

[11968] Failed to execute script 'koboldcpp' due to unhandled exception!

------Let me know what you figure out, when you're able. Thanks.

I double-checked everything on my end, so my only guess at this point is that you're using an ancient CPU without AVX2 support, or that something is preventing KoboldCPP from creating the _MEI folder in temp.
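For anyone wondering where the old-CPU guess comes from: the number in the Vulkan traceback is a signed 32-bit value, and as unsigned hex it is 0xC000001D, the Windows status code for an illegal instruction. That is what you'd expect when a program runs a CPU instruction the processor doesn't have. A small sketch of the conversion (the helper name and the one-entry lookup table are just for illustration):

```python
# Only the one status code relevant to this thread is listed here.
KNOWN_STATUS = {
    0xC000001D: "STATUS_ILLEGAL_INSTRUCTION",
}


def describe_win_error(win_error: int) -> str:
    """Convert a signed WinError number to unsigned hex plus a name if known."""
    code = win_error & 0xFFFFFFFF  # reinterpret as unsigned 32-bit
    name = KNOWN_STATUS.get(code, "unknown status")
    return f"0x{code:08X} ({name})"


print(describe_win_error(-1073741795))  # prints: 0xC000001D (STATUS_ILLEGAL_INSTRUCTION)
```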

If you're using a very old CPU (pre-2013 for Intel and pre-2015 for AMD), you could try replacing your koboldcpp.exe with https://github.com/LostRuins/koboldcpp/releases/download/v1.76/koboldcpp_oldcpu.... and/or selecting "Use Vulkan (Old CPU)".

If you're not using an ancient CPU you could try disabling your antivirus, but I doubt that's the reason.

It appears I'm running an ancient CPU. Just using --usevulkan didn't help, but I did get it going by downloading the alternate release of KoboldCPP. I renamed the file to koboldcpp.exe and replaced the original version; however, it won't start with Silverpine.exe. Instead, I have to start KoboldCPP from the command line with this:

koboldcpp.exe --model "model.gguf" --usevulkan --gpulayers 43 --multiuser --skiplauncher --highpriority

(I found that I can use --usevulkan OR --usecublas with the alternate koboldcpp.) Then I wait for that to load and start Silverpine.exe. Figured I'd mention it in case it's something you care to remedy.

The only other hiccup I've come across so far is when conversing with Gareth: he'll give a response, then I immediately get asked if I want to buy the shed, and after clicking 'no', I get another response from him as though I had sent a blank input after his initial response.

Beyond that, I am thoroughly impressed with how well the LLM works with whatever prompting and settings you have coded into the game. The responses are not only coherent but intelligent and on point, and the memory throughout a conversation is excellent. Keep it up. Can't wait to see some male characters or maybe even a customizable one for the player to use.