Disable your antivirus then. It really shouldn't be flagging it though.

Three Eyes Software

Recent community posts

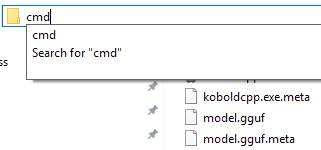

Go into Silverpine_Data\StreamingAssets and open a command prompt there.

Then do: koboldcpp.exe --model "model.gguf" --usecublas --gpulayers 17 --quiet --multiuser 100 --skiplauncher --highpriority

Replace --usecublas with --usevulkan if you have an AMD GPU.

Replace 17 with 27 if you have 8 GB of VRAM, or 43 if you have 10 GB or more.

Running it this way makes the command prompt show the full error instead of closing.
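For anyone who'd rather not edit the command by hand, here is a minimal Python sketch that picks the `--gpulayers` value from the VRAM guidance above and assembles the same argument list. The function names are made up for illustration, and treating anything under 8 GB as 17 layers is my reading of the advice, not something KoboldCPP enforces.

```python
def gpu_layers_for_vram(vram_gb: float) -> int:
    """Map VRAM size to the --gpulayers values suggested in the post."""
    if vram_gb >= 10:
        return 43
    if vram_gb >= 8:
        return 27
    return 17  # value used in the original command (assumed for < 8 GB)


def build_koboldcpp_args(model_path: str, vram_gb: float, amd_gpu: bool = False) -> list:
    """Assemble the argument list from the post; flags are copied verbatim."""
    backend = "--usevulkan" if amd_gpu else "--usecublas"
    return [
        "koboldcpp.exe",
        "--model", model_path,
        backend,
        "--gpulayers", str(gpu_layers_for_vram(vram_gb)),
        "--quiet",
        "--multiuser", "100",
        "--skiplauncher",
        "--highpriority",
    ]


if __name__ == "__main__":
    # Example: an AMD card with 8 GB of VRAM.
    print(" ".join(build_koboldcpp_args("model.gguf", 8, amd_gpu=True)))
```

The list form can be passed straight to something like `subprocess.run`, which handles quoting a model path that contains spaces.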

There is none right now.

The NPCs are prone to lying because it's an inherent property of LLM-based AI.

If two different NPCs give you wildly different answers to a question without having exchanged information/having overheard the conversation by being nearby, it's not part of their knowledge database and they made it up.

You could try moving the entire game folder to a user folder like Downloads so it doesn't need any special permissions, though I don't think that's the problem, since it looks like it's hitting an access violation while trying to read from a 64-bit memory address.

I can't say anything about this beyond that since searching for this problem doesn't really return anything of substance, and KoboldCPP is third-party software which I didn't develop.

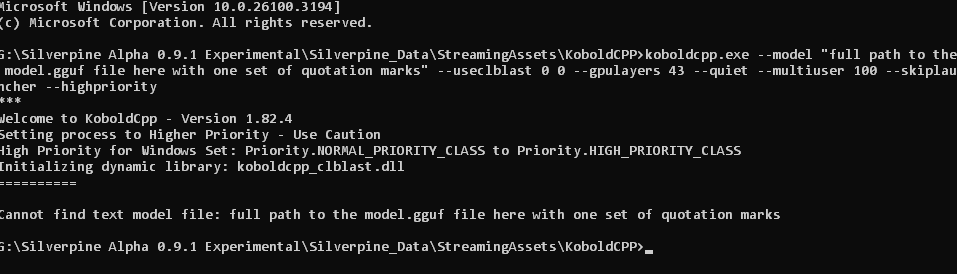

"full path to the model.gguf file here with one set of quotation marks" should be replaced with "G:\Silverpine Alpha 0.9.1 Experimental\Silverpine_Data\StreamingAssets\KoboldCPP\model.gguf" here, assuming you didn't move the folder.

Oops, I forgot the koboldcpp.exe before the arguments, but yes, you already posted the error. There's a single search result which turned out to be blasbatchsize related, but blasbatchsize defaults to 512 for Vulkan which isn't a problem.

You can try this which uses OpenCL instead of Vulkan:

koboldcpp.exe --model "full path to the model.gguf file here with one set of quotation marks" --useclblast 0 0 --gpulayers 43 --quiet --multiuser 100 --skiplauncher --highpriority

Otherwise I'm out of ideas other than a driver update since it's an unspecified exception.

I've added a build that uses a more recent version of KoboldCPP. It might fix the issue.

If it doesn't fix it, open a command prompt inside the KoboldCPP folder as shown in the screenshot.

Then run:

--model "full path to the model.gguf file here with one set of quotation marks" --usevulkan --gpulayers 43 --quiet --multiuser 100 --skiplauncher --highpriority

This will show you the full error instead of closing the window when the exception happens.

Since Windows doesn't seem to provide a way to capture the output of a process without redirecting it and hiding the command prompt completely, the game can't display the actual error by itself.
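To illustrate the trade-off, here is a rough Python sketch of what redirecting a child process's output looks like. This is not how the game launches KoboldCPP (it isn't written in Python); `run_and_capture` is a hypothetical helper, and the child here is just a throwaway Python one-liner standing in for a crashing program. Once the output goes into pipes, the parent can read it, but nothing shows up in the child's own console window.

```python
import subprocess
import sys


def run_and_capture(args):
    """Run a child process with stdout/stderr redirected into pipes.

    The parent receives the text, but the child no longer prints to a
    visible console, which is the trade-off described in the post.
    """
    result = subprocess.run(args, capture_output=True, text=True)
    return result.returncode, result.stdout, result.stderr


if __name__ == "__main__":
    # Stand-in child: writes an error message and exits with a non-zero code.
    child = [sys.executable, "-c", "import sys; sys.stderr.write('boom'); sys.exit(3)"]
    code, out, err = run_and_capture(child)
    print("exit code:", code)
    print("captured stderr:", err)
```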

Does the command prompt open and then do nothing forever, like it did for kuu24? Everything should set itself up automatically.

- You're prompted to choose server or GPU

- You choose GPU

- The game tells you that both files are missing

- The game downloads KoboldCPP

- The game downloads the AI model

- A command prompt opens and KoboldCPP loads the model, which should take 2 minutes at most depending on your drive speed

You shouldn't have to manually do anything with the .exe at all.

If the command prompt opens and then does nothing after "High priority for Windows Set...", perhaps there's a problem with this older version of KoboldCPP and the newest Nvidia drivers? I have no way of verifying this myself because I use a 1070.

Does the command prompt open and then close by itself?

Can you further describe your issue specifically?

You're right, the ranged weapon logic did break at some point. Thanks for reporting it.

Also, the shed thing is probably not a bug. If Gareth gives you the key through other means in RP without selling it to you, you don't actually unlock the shed, because the key is a purely cosmetic item in 0.8. This will be a feature in 0.9 though.

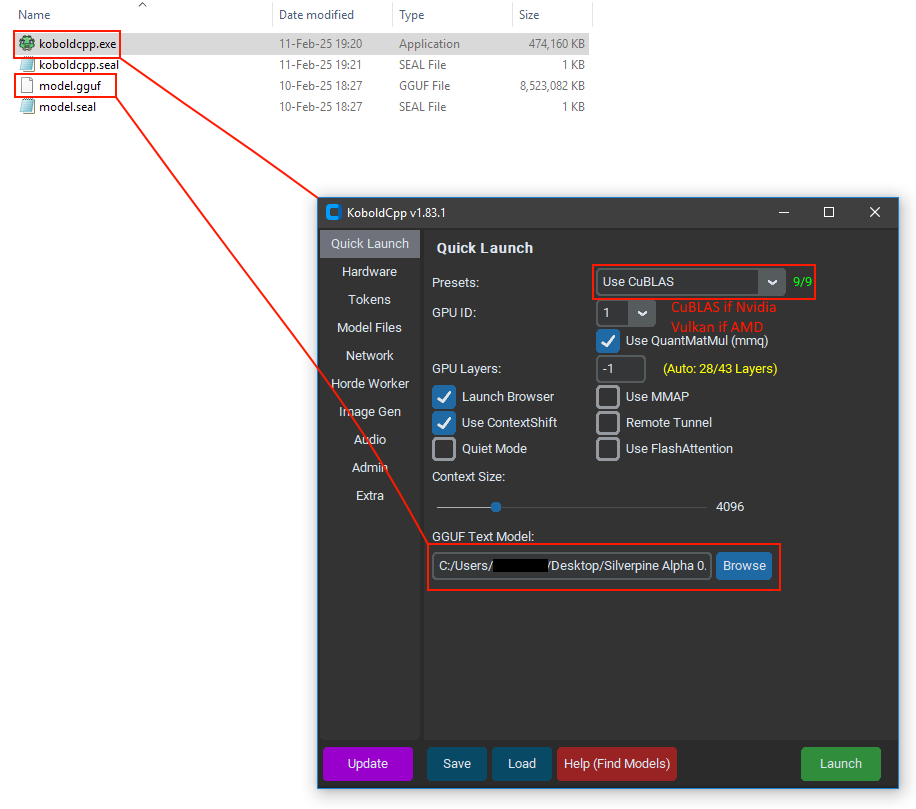

Try replacing the koboldcpp.exe file in Silverpine_Data\StreamingAssets\KoboldCPP with this new version: https://github.com/LostRuins/koboldcpp/releases/download/v1.83.1/koboldcpp.exe

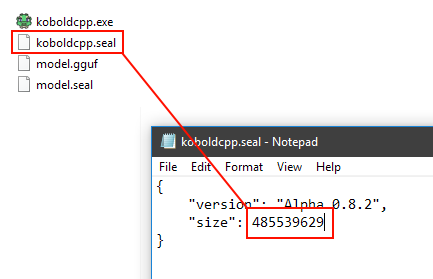

Replace the 467832398 inside koboldcpp.seal (just open it with a text editor) with 485539629 or the game will think koboldcpp.exe has been corrupted.
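If you'd rather script the seal edit than use a text editor, something like this Python sketch would do it. It assumes koboldcpp.seal is a plain text file readable as UTF-8 (the post only says it opens in a text editor), and `update_seal` is a made-up helper name. It changes nothing if the old number isn't found.

```python
from pathlib import Path


def update_seal(seal_path, old_value: str, new_value: str) -> bool:
    """Replace old_value with new_value inside the seal file.

    Returns False and leaves the file untouched if old_value is absent.
    Assumes the file is plain text (UTF-8), which is not confirmed.
    """
    path = Path(seal_path)
    text = path.read_text(encoding="utf-8")
    if old_value not in text:
        return False
    path.write_text(text.replace(old_value, new_value), encoding="utf-8")
    return True


if __name__ == "__main__":
    # Run from inside Silverpine_Data\StreamingAssets\KoboldCPP.
    if Path("koboldcpp.seal").exists():
        print(update_seal("koboldcpp.seal", "467832398", "485539629"))
```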

I'm not sure what could be the issue if it just sits there instead of crashing.

All the player character information (minus the sprites themselves, only the paths to the files) gets baked into the save file when you start a new game. It's not read from the JSON file every time. You can't change description/scale/offset/racials on an existing save file.

I will change this in the next version though, since the current behavior is unintuitive.
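As a rough picture of what "baked into the save file" means, here is a small Python sketch. The game's actual save format and field names aren't shown anywhere in this thread, so `new_game`, `load_player`, and the dictionary keys are all hypothetical. The point is only that the save keeps its own copy, so later edits to the character definition don't reach an existing save.

```python
import copy


def new_game(character: dict) -> dict:
    """Create a save that stores its own copy of the character data."""
    return {"player": copy.deepcopy(character)}


def load_player(save: dict) -> dict:
    """Read the player from the save, never from the character definition."""
    return save["player"]


if __name__ == "__main__":
    # Hypothetical fields standing in for description/scale/offset/racials.
    character = {"description": "tall ranger", "scale": 1.0, "sprite_path": "sprites/ranger.png"}
    save = new_game(character)

    # Editing the definition after the save exists has no effect on the save.
    character["scale"] = 2.0
    print(load_player(save)["scale"])
```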

I can't replicate the issue. There isn't anything that could be throwing a hidden exception (e.g. due to permission problems) at some unfortunate point either, since being able to select the custom character means it has been fully set up successfully, and after that the game would show a window with an error message if something were to go wrong.

On a side note, do you think it'd be possible for the player to have the option to have a dialog image portrait for them for being clothed versus nude?

I won't add this for the default characters, but I can make this an option for custom characters.