If you only have 6 GB of graphics memory combined with 16 GB of RAM, the game might choke while loading the model if your other applications are taking up too much RAM.

If you have exactly 10 GB of graphics memory, the game will fully offload the model to the GPU, which should take 9.17 GB. I have no way of verifying whether this actually works under real-world conditions, since I only have 8 GB myself.

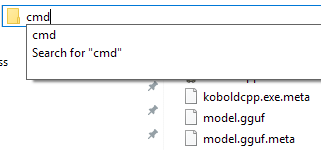

To see what the problem is, open a command prompt in the StreamingAssets folder, as shown in the picture, and run this:

koboldcpp.exe --model "model.gguf" --usecublas --gpulayers 17 --multiuser --skiplauncher --highpriority

Replace --usecublas with --usevulkan if you don't have an Nvidia GPU.

Replace 17 with 27 if you have 8 GB of VRAM, or 43 if you have 10 GB or more.

When KoboldCPP is launched from a command prompt like this, the window won't close when it throws the error, so you can read the message.
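If you'd rather not edit the command by hand, here is a minimal Python sketch that picks the flags from the rules above. The function names `pick_gpu_layers` and `build_command` are made up for illustration, and it assumes that anything under 8 GB of VRAM should use the 17 layers from the example command. The flags themselves are only the ones already shown above.

```python
def pick_gpu_layers(vram_gb: float) -> int:
    """Map VRAM size in GB to the --gpulayers values suggested in this guide."""
    if vram_gb >= 10:
        return 43
    if vram_gb >= 8:
        return 27
    # Assumption: below 8 GB, fall back to the 17 layers from the example command.
    return 17


def build_command(vram_gb: float, nvidia: bool, model: str = "model.gguf") -> list[str]:
    """Build the koboldcpp.exe argument list for the given hardware."""
    # --usecublas for Nvidia GPUs, --usevulkan for everything else.
    backend = "--usecublas" if nvidia else "--usevulkan"
    return [
        "koboldcpp.exe",
        "--model", model,
        backend,
        "--gpulayers", str(pick_gpu_layers(vram_gb)),
        "--multiuser",
        "--skiplauncher",
        "--highpriority",
    ]


if __name__ == "__main__":
    # Example: a non-Nvidia card with 8 GB of VRAM.
    print(" ".join(build_command(8, nvidia=False)))
```

The printed line can be pasted into the command prompt opened in the StreamingAssets folder.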

If it's running out of memory it should throw something like:

ggml_vulkan: Device memory allocation of size 1442316288 failed.

ggml_vulkan: vk::Device::allocateMemory: ErrorOutOfDeviceMemory

llama_kv_cache_init: failed to allocate buffer for kv cache

llama_new_context_with_model: llama_kv_cache_init() failed for self-attention cache
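If the output scrolls by too fast, you can copy it into a text file and search it for these messages. Below is a rough Python sketch of that idea. The marker strings are taken only from the Vulkan messages quoted above, so other backends may word their errors differently, and the names `OOM_MARKERS` and `find_oom_lines` are just illustrative.

```python
# Substrings taken from the out-of-memory messages quoted in this guide.
# Assumption: other backends may print different wording that is not covered here.
OOM_MARKERS = (
    "Device memory allocation of size",
    "ErrorOutOfDeviceMemory",
    "failed to allocate buffer for kv cache",
    "llama_kv_cache_init() failed",
)


def find_oom_lines(log_text: str) -> list[str]:
    """Return the log lines that contain any of the known out-of-memory markers."""
    return [
        line.strip()
        for line in log_text.splitlines()
        if any(marker in line for marker in OOM_MARKERS)
    ]


if __name__ == "__main__":
    sample = (
        "ggml_vulkan: Device memory allocation of size 1442316288 failed.\n"
        "ggml_vulkan: vk::Device::allocateMemory: ErrorOutOfDeviceMemory\n"
    )
    for hit in find_oom_lines(sample):
        print("possible out-of-memory error:", hit)
```

If this finds anything, lowering the --gpulayers value is the first thing to try.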

If this is the case, you can use the KoboldCPP GUI to manually adjust things until it works. If KoboldCPP is already running when you launch the game, the game just uses whatever KoboldCPP process is running in the background.

Do let me know what the problem is though so I can fix it for the next version.