This is very helpful, finally I can filter out the AI stuff. Thanks a lot :)

However, I don't see the "No AI Tag" in my project infobox after setting it up. Am I missing something?

From a design philosophy standpoint, and as a user, I would not want to see a "no-ai" metainfo in that box.

You also do not want to see a "not made with unity", "not made with rpg maker", "no touchscreen input", and so on.

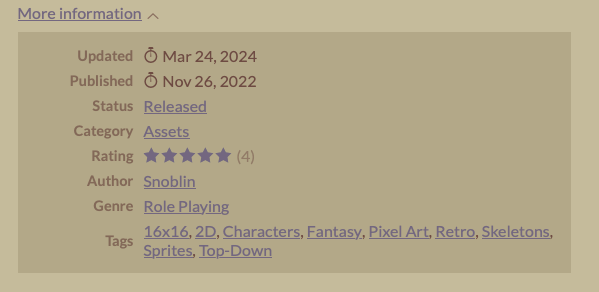

Leafo called it a tag, it is in the suggested tag box, and it shows up as a URL modifier with tag, but it seems to be metainfo, like "made-with-godot".

Me too. And that tag was a regular tag before as well, as far as I know. It was bound to be, what with Itch's free range tagging system.

So yeah, I expect the info box to show the meta info about tool usage in the future. There seems to be much development in that regard. Itch now autodetects certain engines as well.

I just hope they will not show no-ai as info on every game. Maybe for assets it is appropriate. For regular games it would be distracting. It should show the usage of AI, but not the non-usage.

Is all AI stuff really that bad? I guess if it's just code randomly generated by AI then it can be bad. I've tried to use AI to tell an interactive fiction game with endless possibilities. It was very difficult to build the infrastructure, UX, and logic to support AI content driven by a user's choices (choose-your-own-adventure style).

I see this as the future (a long way out) of AAA RPG games as well. If done correctly, AI can extend or create endless side quests that are meaningful and on par with hand-crafted assets, but with even more integration and tailored to your character's actions/equipment/history/looks etc...

If you want, check out how I crafted 3 different AI models to build a story-driven game tailored by a user:

Agreed! That would be great and is doable right now. As a developer, you'd have to store all the metadata and feed the AI information and instructions, per character. It is something that can be done, and some "chat" style games have done it before for a single character. The future will be fun when you can chat with any NPC and have a full conversation with an AI that sticks to that NPC's character/background/emotions. Can't wait!
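
For anyone curious, here is a rough sketch of what "store the metadata and feed it to the AI, per character" could look like. Everything in it is made up for illustration: the `NPC` fields, the `build_messages` helper, and the system/user message layout are assumptions, not how any particular game or model API does it.

```python
from dataclasses import dataclass, field


@dataclass
class NPC:
    # Hypothetical per-character metadata; a real game would store much more.
    name: str
    background: str
    mood: str
    memory: list[str] = field(default_factory=list)  # things this NPC knows about the player


def build_messages(npc: NPC, player_line: str) -> list[dict[str, str]]:
    """Assemble a chat-style prompt that asks the model to stay in character."""
    facts = "\n".join(f"- {m}" for m in npc.memory) or "- (nothing yet)"
    system = (
        f"You are {npc.name}. Background: {npc.background}. "
        f"Current mood: {npc.mood}. Stay in character.\n"
        f"What you know about the player:\n{facts}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": player_line},
    ]


# Example character; the messages would then be sent to whatever model the game uses.
smith = NPC("Brenna", "village blacksmith", "wary", ["Player returned her stolen hammer"])
messages = build_messages(smith, "Can you repair my sword?")
```

The point is only that each NPC carries its own instructions and history, so the same model can play many characters without them bleeding into each other.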

If you play my game, put the difficulty on "Infinite" and it gives you a free form text box to forge your own path through the story instead of the AI generated options. It's a glimpse into how it would work. AI is good at handling your input and trying to keep the story going at the same time. I've instructed my AI to try and keep the story moving along but take the user's input into account. It's not perfect but it's the worst it will be....

The issue isn't whether AI/LLMs can generate interesting and useful game content. (They almost can; it still needs a lot of human oversight, but we are reaching a point where they can generate simpler types of content and art without more than a normal proofing check.)

The actual issues include:

- Whose content is being used to train the AI, and whether that content was gathered ethically (or even legally).

- Whether the AI is being used by indie creators to fill gaps in their own skills/abilities, without which the content would never be created or released, or by corporations trying to cut human wages to increase shareholder profits: content they could budget for, where the savings go to profits rather than to lowering the cost of the finished product.

- The amount of damage generative-AI databanks & processors are doing to the environment, which is nearing cryptocurrency/NFT levels.

Much like crypto, there's no profit-making endgame here. Gen-AI is plenty useful for brainstorming, for making a rough outline to fill in later, for draft boilerplate, for sketch ideas, for clipart-style images. One of the best uses I've seen is "generate a newspaper article about X topic" to be thrown at players in a TTRPG - it doesn't matter if the article sounds bland or the details are a little off. The GM doesn't have to spend half an hour writing 300 words of "in 1982, a similar creepy incident happened to this college's football team." AI is great at generating flavor text, as long as the details don't matter AND a human is checking the results for basic consistency. (The AI will not notice that parent & child should share the same last name.)

But it's being used to generate real news articles, without human oversight, and for those, it matters that the "facts" aren't real. And the amount of processing and cross-checking required to auto-generate accurate content is beyond our current level of computers - and beyond the level we expect to have.

The hostility against AI content isn't driven by "this makes terrible text/art." Plenty of indie developers make terrible text and art. The hostility is that the people promoting AI - most of the companies funding it - are trying to use it to remove humans from their development process so they can increase corporate profits, not release tools that can enhance individual creativity. When they realize there's never going to be a jump to "this can write entire books that actual people want to read/create movies people will pay to watch by throwing some keywords into a generator," that funding will evaporate and the public-usable tools will vanish along with the money.

I agree with your first bullet point, and that's a question for ethics and legal practitioners. As end-users of a product (like ChatGPT), we shouldn't have to worry about the liability of using a tool (though we can still be concerned). This debate likely isn't going anywhere any time soon.

Your second bullet is a little more nuanced. Technology has ALWAYS been used to optimize processes, remove humans, and lower operating costs. Think how Disney animations were made 50 years ago... thousands of animators. Now, a handful of animators with powerful technology. Excel replaced bookkeeping jobs, word processors replaced typists and stenographers, and then there are self check-out systems, ATMs, agricultural machines/autonomy, manufacturing robots, SaaS platforms... The list goes on. This isn't really all that different. Why employ hundreds of side quest writers when an AI infrastructure can write better, more dynamic content on the fly? There will still be places for this work, just as a lot of the professions I mentioned above still exist, just not as many as before.

The environmental concern about using AI is an interesting one. I think we could have said this about every newer technology. Everyone now has a computer in their home, a refrigerator, etc... Not to go down that road again, but there's precedent. Do I think it's good? No. However, the world is slowly shifting to renewable energy that is generated closer to where the energy is needed, keeping any impacts local and reducing the risk to, and reliance on, infrastructure that others depend on. Energy needs are growing rapidly and won't slow down, but so are the technologies and infrastructure that help generate the new energy. Ironically, AI will help reduce energy waste and optimize grids (like Tesla's Autobidder software) where waste is rampant.

AI should continue to get better and is probably the worst it will ever be. That will only heighten the notion that AI is taking away jobs because of corpo greed (it IS about greed, but that's capitalism, in the US). But if history tells us anything, we will find ways to adapt, and people will find new and interesting jobs that coincide with new technologies and tools. Even AI.

*disclosure: I'm an optimist in general and choose to see the positive effects of a new technology while acknowledging the negatives