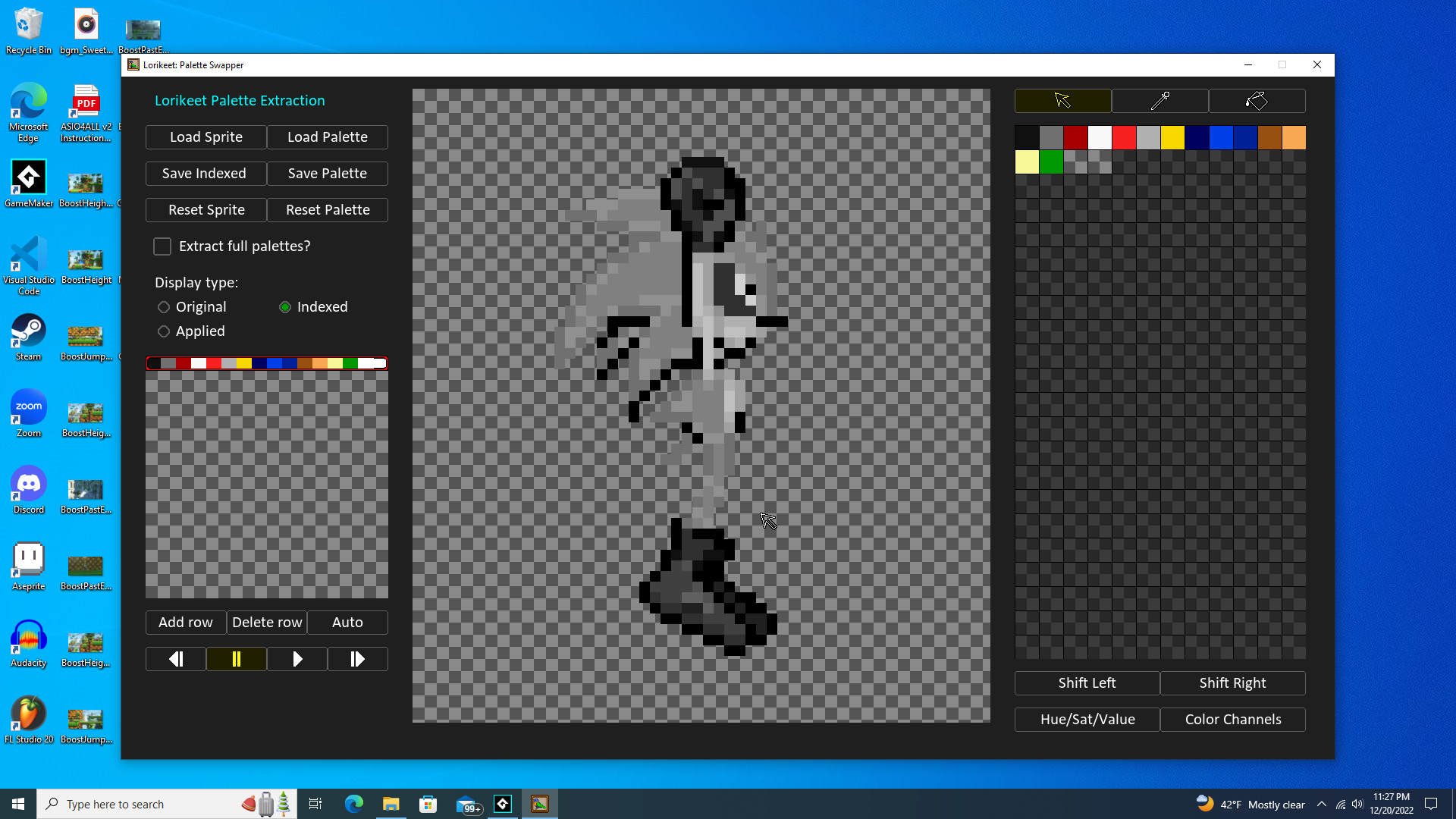

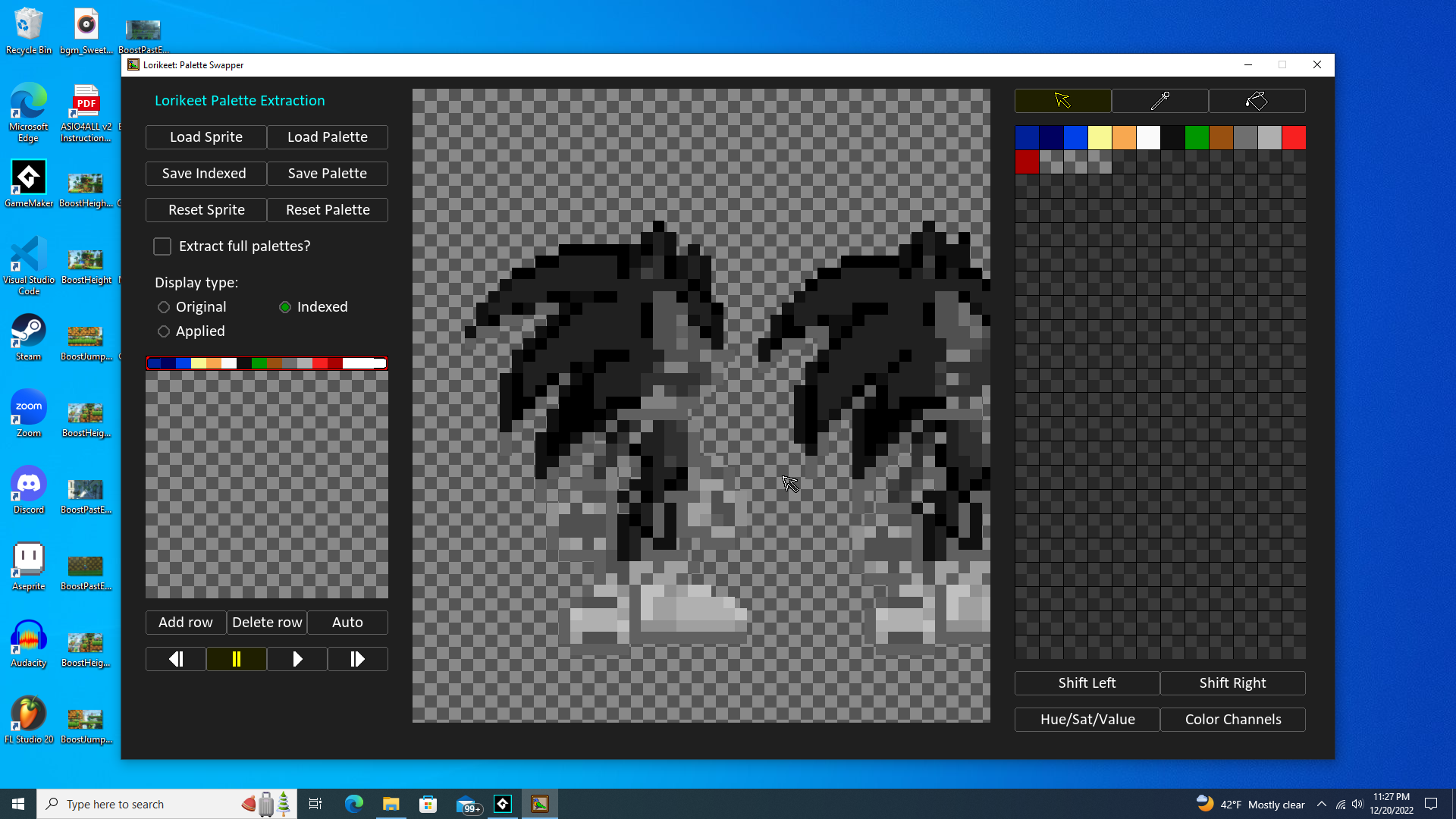

Would you be able to explain how the default sprite is converted to its indexed format? Lorikeet looks like a great tool and I want to integrate it into a project I'm working on, but I'd like to create an "all-in-one" shader that first performs the indexed color conversion on a sprite's pixel and then calls the "GetLorikeetColor" function on that converted pixel value to get the correct palette value. Basically, I'm trying to do everything at runtime instead of using the editor to convert my base sprites to their indexed versions first (there are quite a lot of sprites in my project, and it would take a while to do that for all of them).

I tried combining the Lorikeet shader with the standard NTSC-accurate grayscale shader from GameMaker's website, but I quickly realized that isn't what you used to perform the index conversion, so it didn't work. I'd appreciate some insight!
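For reference, the grayscale step I tried boils down to roughly the following per-pixel math, as far as I can tell. This is only a Python sketch of the idea, not the actual shader code: the weights are the standard NTSC (Rec. 601) luma coefficients, and the function name `ntsc_gray` is made up for illustration.

```python
def ntsc_gray(r, g, b):
    """Return the NTSC-weighted gray level of an RGB pixel.

    Each channel is expected in the range 0.0..1.0. The weights are the
    standard Rec. 601 luma coefficients (an assumption about what the
    GameMaker grayscale example uses).
    """
    return 0.299 * r + 0.587 * g + 0.114 * b


# Example: gray level of a pure red and a pure green pixel.
red_gray = ntsc_gray(1.0, 0.0, 0.0)
green_gray = ntsc_gray(0.0, 1.0, 0.0)
print(red_gray, green_gray)
```

This produces a continuous brightness value rather than a palette index, which I assume is why feeding it into the Lorikeet lookup gave the wrong colors.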