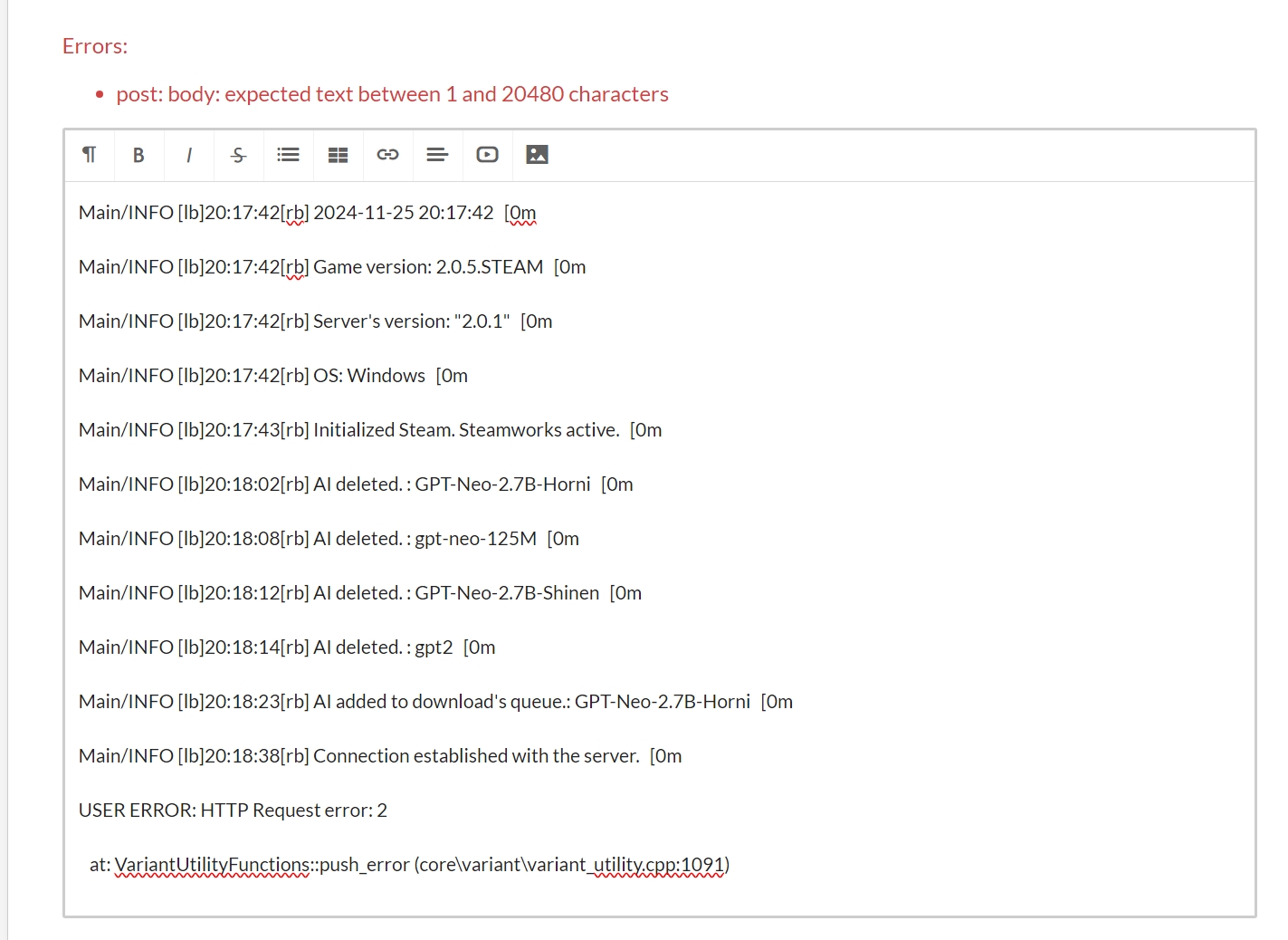

If you encounter any problem, you can ask for help here. Support is available for both the demo and paid versions :).

Attach logs to your post!

Without logs, I might not be able to help you.

Where to find the game’s logs?

- On Windows -

%APPDATA%/Roaming/aidventure

- On Linux -

~local/share/godot/app_userdata/AIdventure OR ~.local/share/godot/app_userdata/AIdventure